Latent semantic indexing: Why marketers don’t need to worry about it

What is latent semantic indexing and does it matter for search engines? Understand how it differs from NLP and what semantic optimization tools to use.

With additional contributions from Zoe Ashbridge

Whether or not Google uses latent semantic indexing (LSI) and LSI keywords in its ranking algorithm is debated among SEOs.

Google representatives have publicly stated that Google does not use LSI keywords as a ranking factor.

So why is it still debated?

Probably because people say “LSI keywords” when they mean “semantic keywords.” After reading this guide, which covers everything you need to know about LSI keywords and the role of semantics in search, you’ll see how easy it is to confuse the two.

As you read these vital sections for context, remember: Google has said it does not use LSI keywords. So what does it use? More on that in a bit.

What is latent semantic indexing?

Let’s begin with what LSI is and the mathematics behind it.

Latent semantic indexing is an information retrieval technique and a mathematical method for analyzing large datasets to understand the closeness of words and concepts. It lets information retrieval systems understand connections between words and how they fit into concepts.

For example, let’s say you search for “healthy eating.” A document might not use the exact phrase “healthy eating” but instead use terms like “balanced diet,” “nutritious meals,” or “whole foods.” These terms aren’t exact synonyms, but LSI treats them as related because they often appear together in documents about nutrition, even though they weren’t in the original search.

LSI analyzes co-occurrence patterns of words across multiple documents (in simple terms, how often words appear together in different documents), identifying latent structures beyond simple keyword matching.

For example, it groups related terms like “automobile” and “car” based on their frequent use in similar contexts. That helps systems better understand the overall meaning of the content, especially in large-scale information retrieval.

Developed in the late 1980s, latent semantic indexing marked a breakthrough in addressing two significant challenges in text search and information retrieval: polysemy (where a word has multiple meanings) and synonymy (where different words share the same meaning).

- Polysemy: bank your money, or river bank

- Synonymy: bank and vault

These issues often lead traditional keyword-based systems to deliver poor or irrelevant results because they rely too heavily on exact keyword matches.

LSI addresses this problem by identifying patterns of word usage and co-occurrence. It allows a system to infer a query’s semantic context and detect hidden relationships between terms, even when those terms rarely co-occur in the same documents or when the query’s exact keywords are absent from the relevant documents.

The mathematical foundation of latent semantic indexing

At the heart of latent semantic indexing is a mathematical process called singular value decomposition (SVD). SVD breaks a large matrix into three smaller ones to reveal hidden relationships between terms and documents. In LSI, this matrix is the term-document matrix. Each row is a term, and each column is a document, with the values showing how often terms appear in those documents.

Cosine similarity plays a crucial role in comparing document representations by measuring their similarity. This measurement helps understand the distribution of terms in related texts.
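As a rough illustration of the measurement described above, here is a minimal Python sketch of cosine similarity between two term-count vectors. The function name and the sample vectors are assumptions made for this example; they are not taken from any search engine’s implementation.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an empty vector as having no similarity
    return dot / (norm_a * norm_b)

# Hypothetical count vectors over the same three-term vocabulary.
doc_a = [2, 1, 0]
doc_b = [4, 2, 0]   # same proportions as doc_a, just a longer document
doc_c = [0, 0, 3]   # shares no terms with doc_a

print(cosine_similarity(doc_a, doc_b))
print(cosine_similarity(doc_a, doc_c))
```

Because the score depends on the direction of the vectors rather than their length, a long document and a short one that use terms in the same proportions come out as highly similar.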

How singular value decomposition works

Singular value decomposition breaks down this large matrix into three smaller matrices:

- U (terms): a matrix representing terms and their relationships to latent concepts.

- Σ (singular values): a diagonal matrix that highlights the strength of each latent concept.

- V (documents): a matrix representing documents and their relationships to the latent concepts.
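In symbols, the decomposition and the truncation that LSI typically relies on can be written as follows. The letters A, m, n, r, and k are notation chosen for this sketch rather than anything defined in this article: A is a term-document matrix with m terms and n documents, r is its rank, and k is the number of latent concepts kept.

```latex
A = U \Sigma V^{\top},
\qquad
A_k = U_k \Sigma_k V_k^{\top}, \quad k < r
```

Here A_k keeps only the k largest singular values in Σ and the matching columns of U and V, which is what compresses the raw counts into a smaller concept space.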

Through this process, LSI converts raw term-document data into a more abstract space, allowing it to detect relationships between terms that aren’t directly connected in the original data.

Here’s an example of how this might look. Imagine we have three documents:

- Document 1: “The cat plays with yarn”

- Document 2: “The dog chases the cat”

- Document 3: “The cat sleeps on the couch”

First, we create a term-document matrix with the terms (words) as rows and the documents as columns. This matrix captures the number of times a term appears in a document.
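The matrix for these three documents can be built in a few lines of Python. This is a minimal sketch that assumes a deliberately naive tokenizer (lowercasing and splitting on spaces); the function names are illustrative only.

```python
from collections import Counter

# The three example documents from the text.
documents = [
    "The cat plays with yarn",
    "The dog chases the cat",
    "The cat sleeps on the couch",
]

def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()

def build_term_document_matrix(docs):
    """Return (vocabulary, matrix); matrix[i][j] counts term i in document j."""
    counts = [Counter(tokenize(doc)) for doc in docs]
    vocabulary = sorted(set().union(*counts))
    matrix = [[c[term] for c in counts] for term in vocabulary]
    return vocabulary, matrix

vocabulary, matrix = build_term_document_matrix(documents)
for term, row in zip(vocabulary, matrix):
    print(f"{term:<7} {row}")
```

Each printed row is one term and each position in the row is one document, so the row for “cat” shows a count in all three documents while the row for “yarn” has a count only in Document 1.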

Using singular value decomposition (SVD), the matrix is split into three smaller matrices. This can uncover hidden relationships between words and documents.

If someone searches for “yarn,” LSI can recognize that in Document 1, “yarn” and “cat” appear together. So, even if another document only mentions “cat,” LSI understands there’s a connection between the two terms.
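To make the mechanics concrete, the sketch below runs SVD on the three example documents with NumPy and scores a “yarn” query against each document in the reduced space. The choice of k = 2 latent concepts, the naive tokenizer, and the helper names are assumptions for illustration; a corpus this small only demonstrates the steps, not realistic retrieval quality.

```python
import numpy as np

documents = [
    "The cat plays with yarn",
    "The dog chases the cat",
    "The cat sleeps on the couch",
]

# Term-document matrix: rows are terms, columns are documents.
tokens = [doc.lower().split() for doc in documents]
vocabulary = sorted({word for doc in tokens for word in doc})
A = np.array([[doc.count(term) for doc in tokens] for term in vocabulary],
             dtype=float)

# Decompose A into U (terms), s (singular values), and Vt (documents).
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Keep only the k strongest latent concepts (k = 2 is an arbitrary choice).
k = 2
U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k, :]
doc_vectors = Vt_k.T  # one k-dimensional row per document

def fold_in_query(query):
    """Project a query into the same k-dimensional concept space."""
    q = np.array([query.lower().split().count(t) for t in vocabulary],
                 dtype=float)
    return (q @ U_k) / s_k

def cosine(a, b):
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

query_vector = fold_in_query("yarn")
scores = [cosine(query_vector, d) for d in doc_vectors]
best = int(np.argmax(scores))
print("Scores per document:", [round(x, 3) for x in scores])
print("Best match: Document", best + 1)
```

The query is compared with documents in the concept space rather than by exact word overlap, which is the step that lets LSI relate texts that share patterns of usage instead of identical keywords.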

“SVD helps LSI detect connections between words and documents, even if the exact terms don’t always match.”

It’s like finding hidden topics that link similar words and ideas across multiple documents. You can see how this could make search results more contextual and relevant.

Note that LSI was developed for documents, not the entire online content infrastructure. For documents, the LSI process enables a better understanding of related terms (like “dog” and “cat”) and how documents are tied to broader topics.

The strength of latent semantic indexing is in detecting hidden semantic relationships in data. It goes beyond simple keyword matching to uncover patterns of meaning.

LSI groups semantically similar words by analyzing how terms co-occur across many documents, even if they aren’t direct synonyms. That lets systems better understand the context of terms and retrieve documents conceptually related to a query, even without exact keyword matches.

For the sake of discussion, let’s explore an example where a search engine could use LSI and deduce that someone searching for “digital camera” might also be interested in content about “photography equipment” or “camera reviews.”

While latent semantic indexing is mathematically sound and effective in detecting patterns based on how often words appear together, it can’t truly understand the meaning behind those words. This approach relies purely on statistical co-occurrence without considering the deeper context or intent.

It’s a purely statistical method that groups terms based on their presence in documents rather than their semantic significance or the actual relationships between concepts. As a result, while LSI can group related terms, it doesn’t fully grasp what they represent in different contexts.

The myth of LSI keywords in SEO

During the early 2000s, SEO professionals began to speculate that LSI keywords—terms conceptually related to primary keywords—could enhance website rankings. The belief was that search engines were using LSI or similar techniques to understand the meaning behind web content.

As a result, many SEO strategies focused on inserting related terms, assuming this would improve a page’s relevance and make it more attractive to search engine algorithms.

This approach, however, was based on a misunderstanding of how search engines operate. While semantic relationships were valuable, the belief that search engines specifically used LSI was incorrect.

Despite its initial promise and the persistence of the term “LSI keywords” in some SEO discussions, LSI and LSI keywords are not part of modern search engine algorithms and do not exist as a distinct ranking factor.

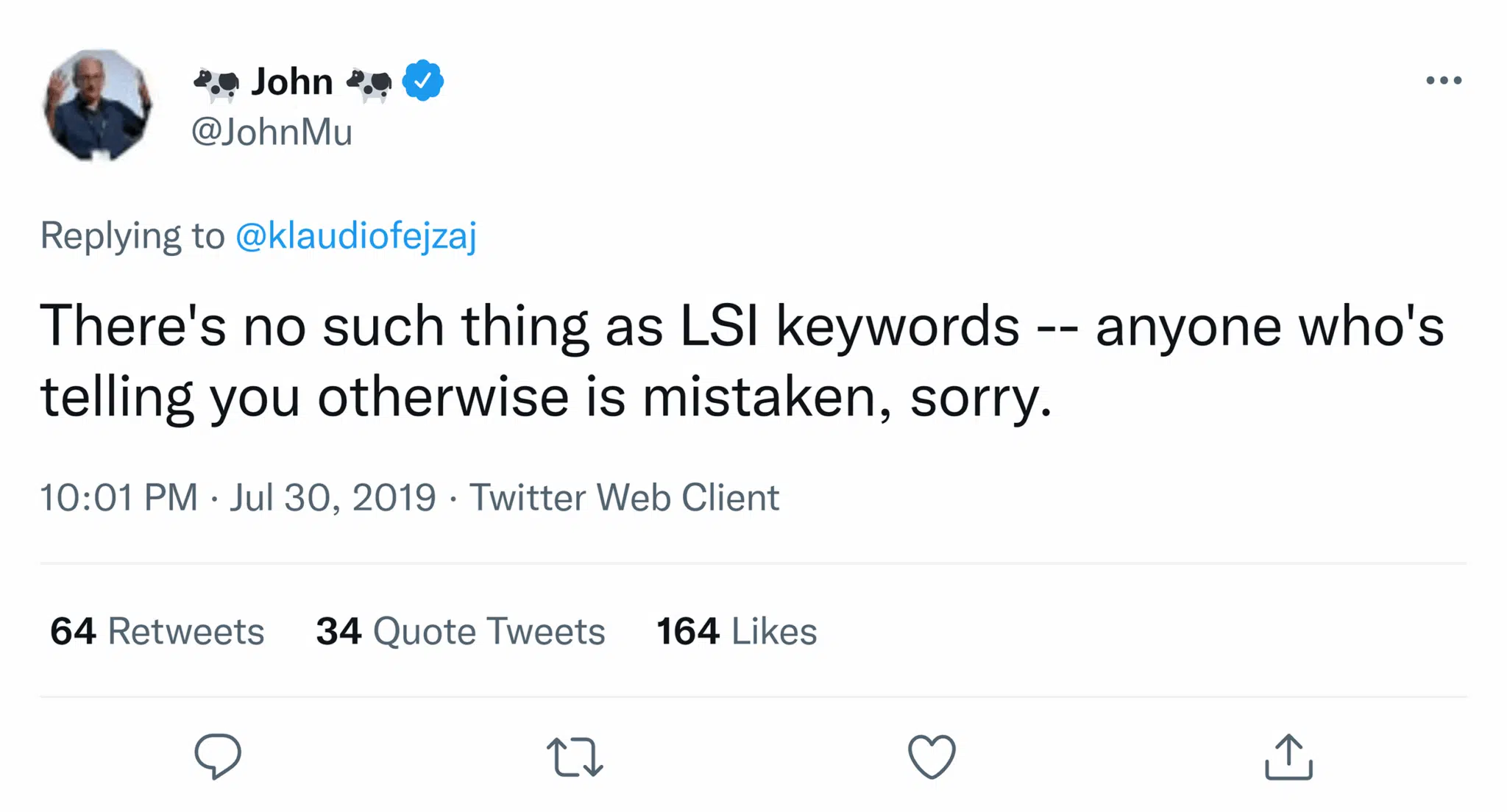

In 2019, John Mueller, a Google representative, confirmed that LSI keywords do not exist in Google’s algorithm.

If Google’s not using LSI, what is it using?

Google cares about semantics. A lot. Search engines today rely on far more advanced approaches to determine the semantic relevance of content.

Remember: LSI was developed in the 1980s—the heyday of floppy disks and pagers. Things have changed quite a bit since then.

Natural language processing

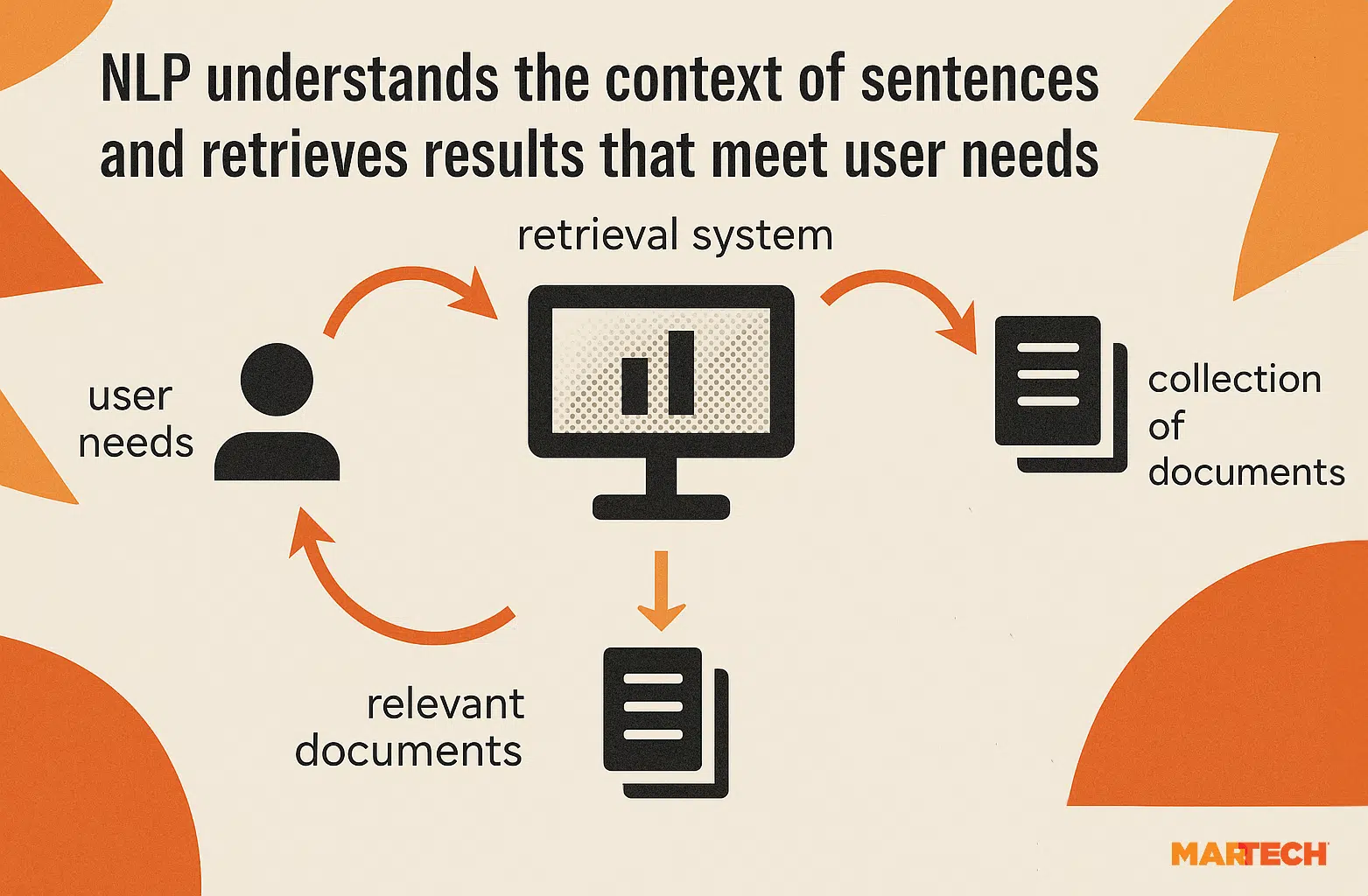

Instead of LSI, search engines use natural language processing (NLP) and machine learning models like BERT (Bidirectional Encoder Representations from Transformers). These models help search engines understand the context, relationships between concepts and user intent behind search queries.

Natural language processing is a form of AI that helps machines understand, interpret and generate human language. NLP is essential in modern search engines, allowing them to grasp the words users type and the intent and context behind them. By parsing language nuances, resolving ambiguities and interpreting user intent more precisely, NLP enhances the overall search experience.

Unlike with LSI, Google has publicly confirmed that it uses NLP in its search capabilities, as in this announcement for Cloud Search:

Just like in Google Search, which lets you search queries in a natural, intuitive way, we want to make it easy for you to find information in the workplace using everyday language. According to Gartner research, by 2018, 30% or more of enterprise search queries will start with a “what,” “who,” “how” or “when.”

Today, we’re making it possible to use natural language processing (NLP) technology in Cloud Search so you can track down information—like documents, presentations or meeting details—fast.

Computational linguistics plays a crucial role in understanding and developing NLP technologies, providing foundational texts and resources that explore the methodologies involved.

Natural language processing goes much further than LSI. NLP doesn’t just analyze how often words appear together but also understands syntax, semantics and context.

With NLP, search engines can:

- Interpret user intent: NLP helps search engines understand what a user is asking for, even if the words they use aren’t literal or are uncommon. For example, “best place for a bite to eat before a theater show” might return restaurants near the theater even though “food” or “restaurant” isn’t mentioned.

- Resolve language ambiguities: NLP interprets words like “bank” (financial institution) and “bank” (riverbank) differently based on their surrounding words and context.

- Grasp the whole meaning of sentences: NLP can discern the complete context of sentences even if the query doesn’t use exact keywords from the indexed content.

For example, if a user searches for “how to change a car tire,” NLP allows the search engine to understand the action (changing) and the object (car tire), delivering results that explain how to do the task, not just pages with the keywords “change” and “car tire.”

The differences between NLP and LSI

There are some key differences between natural language processing and latent semantic indexing:

- LSI focuses on word relationships based on frequency and co-occurrence, while NLP understands the intent and meaning behind those words.

- LSI is limited to analyzing terms in relation to each other, while NLP uses machine learning models like BERT to grasp the deeper meaning of phrases and entire queries.

- NLP and semantic search prioritize user intent, aiming to deliver results based on what the user is actually looking for, while LSI is limited to analyzing patterns in word usage across documents.

- NLP handles language variations and ambiguity much better than LSI, which means it can offer more accurate and relevant results.

Search engines, including Google, use NLP to improve the relevance of search results. NLP analyzes the context behind queries, breaking down user language and recognizing intent. This makes it easier for search engines to deliver results that answer the query more precisely, regardless of how the query is phrased.

Empirical methods play a crucial role in analyzing and validating NLP models, ensuring that these models are effective in understanding and processing language.

For instance, when you search for “best running shoes for flat feet,” NLP interprets this as a user wanting recommendations for running shoes that suit their specific condition.

NLP powers modern search engines to provide more context-driven, relevant results by understanding both words and meaning in a query.

Knowledge graphs

Knowledge graphs are a powerful system that Google uses to understand semantics. Google launched its Knowledge Graph in 2012 (over 20 years after LSI!) to enrich search engine results pages (SERPs) with context.

Here’s an example of knowledge graphs in action.

The keyword “mercury” could mean the planet or the chemical element. A broad search query like “mercury” doesn’t give anything away about what the user wants. The SERPs rely on knowledge graphs to enrich users’ experience.

Knowledge graphs also connect entities. Here’s another example, using the keyword “Leonardo da Vinci.”

The knowledge graph consolidates many entities, including:

- Artworks

- Education

- Birthday

- Death date

- And other related content

The Knowledge Graph surpasses older methods like LSI by structuring information around meaning rather than just word patterns.

With this technology, search engines can:

- Recognize entities: Understand that “Leonardo da Vinci” refers to a historical figure, not just a string of words.

- Disambiguate terms: Distinguish between “Mercury,” the planet, the element, or the Roman god, based on context.

- Deliver rich results: Provide instant answers, such as Leonardo da Vinci’s birth date, in side panels and carousels so searchers can find what they’re looking for.
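The ideas in the list above (entities, disambiguation by context, and instant answers) can be sketched with a toy data structure. Everything in this Python example, including the entity IDs, the context words, and the matching rule, is a simplified assumption for illustration and says nothing about how Google’s Knowledge Graph is actually built.

```python
# Toy knowledge graph: IDs, types, context words, and the scoring rule are
# illustrative assumptions, not Google's actual data model.
KNOWLEDGE_GRAPH = {
    "mercury_planet": {
        "label": "Mercury", "type": "Planet",
        "context": {"planet", "orbit", "sun", "solar"},
        "facts": {"orbits": "Sun"},
    },
    "mercury_element": {
        "label": "Mercury", "type": "ChemicalElement",
        "context": {"element", "metal", "liquid", "thermometer"},
        "facts": {"symbol": "Hg", "atomic_number": 80},
    },
    "mercury_god": {
        "label": "Mercury", "type": "RomanGod",
        "context": {"god", "roman", "mythology", "messenger"},
        "facts": {"pantheon": "Roman"},
    },
    "leonardo_da_vinci": {
        "label": "Leonardo da Vinci", "type": "Person",
        "context": {"painter", "artist", "renaissance"},
        "facts": {"born": "1452-04-15", "died": "1519-05-02",
                  "artworks": ["Mona Lisa", "The Last Supper"]},
    },
}

def resolve_entity(query):
    """Return the ID of the entity a query refers to, or None if ambiguous."""
    text = query.lower()
    words = set(text.split())
    candidates = [
        (len(words & entity["context"]), entity_id)
        for entity_id, entity in KNOWLEDGE_GRAPH.items()
        if entity["label"].lower() in text
    ]
    if not candidates:
        return None
    if len(candidates) == 1:
        return candidates[0][1]
    candidates.sort(reverse=True)
    best, runner_up = candidates[0], candidates[1]
    # Only pick a winner when context words clearly favor one entity.
    return best[1] if best[0] > runner_up[0] else None

print(resolve_entity("mercury"))                  # ambiguous on its own
print(resolve_entity("mercury planet orbit"))
entity_id = resolve_entity("leonardo da vinci birthday")
print(KNOWLEDGE_GRAPH[entity_id]["facts"]["born"])
```

A bare “mercury” query stays unresolved because nothing in it favors one entity, which mirrors why a broad query needs extra context on the results page.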

The role of semantic search in SEO today

Semantic search has transformed how search engines understand content and deliver relevant results. Unlike early search algorithms that relied heavily on keyword matching, modern search engines, notably Google, focus on interpreting a query’s meaning, context and intent.

Information science is crucial in indexing and processing information, providing the foundation for techniques like latent semantic analysis.

This shift allows search engines to provide results that are more accurate and better aligned with what the user is truly looking for, even if their query doesn’t contain the exact search terms in the most relevant content.

How semantic search works

Semantic search leverages advanced natural language processing, machine learning, and entity recognition to understand the relationships between words, synonyms, and related concepts.

Instead of simply counting keyword frequency, search engines now analyze:

- Context: How a term is used in relation to other terms within a document or query.

- Synonyms and variants: Understanding that different words can represent the same concept (e.g., “car” and “automobile”).

- User intent: Recognizing whether the user is looking for information, a product, or an answer to a specific question.

These capabilities allow search engines to deliver more contextually relevant results by interpreting the query and matching it to the most useful content, even if the phrasing differs from what the user typed.

In the past, search engines operated on simple keyword-based matching. For example, if someone searched for “best gaming laptops,” the search engine would return pages that contained this exact keyword phrase.

However, this often led to poor results when users didn’t know the exact terminology or websites gamed the system by overstuffing keywords into content.

Traditional topic modeling techniques often struggle with data sparsity, especially when dealing with short texts like search queries. Without enough data, these models are less effective at uncovering hidden themes, which sets them apart from more advanced technologies like BERT and neural matching.

With semantic search, search engines now understand the broader context and intent behind such queries.

For instance, a search for “best gaming laptops” today could yield results for “top-rated gaming laptops,” “gaming laptops reviews,” or even “features to consider in a gaming laptop.” The search engine understands the user is looking for information about top-performing devices, not just a page with the words “best” and “gaming laptop” repeated multiple times.

SEO today is less about stuffing content with related terms and more about ensuring that content is contextually relevant and answers the user’s intent. Semantic search lets search engines determine whether a user wants to buy a product, find information, or learn how to do something. This means that content must be optimized not just for specific keywords but also for how well it answers user needs.

By understanding user intent, search engines can filter out irrelevant results, such as pages that might use the right keywords but don’t provide the information the user seeks. Search engines analyze content holistically, considering not just keywords but how well the content covers a topic, whether it addresses related subtopics, and whether it matches the user’s underlying intent.

The goal is to provide comprehensive and useful results rather than those that merely contain the right keyword combinations.

For a query about container gardening, for instance, semantic search allows Google to deliver results that cover broader, related topics such as soil recommendations, watering schedules and common pests. Even if a webpage doesn’t include the exact query phrasing, it can still rank highly if it addresses these semantically related aspects.

While using semantically related terms is still valuable for enhancing content depth and topic coverage, it’s not because of LSI. Instead, it’s due to the evolution of search algorithms that focus on understanding the meaning, context and intent behind content.

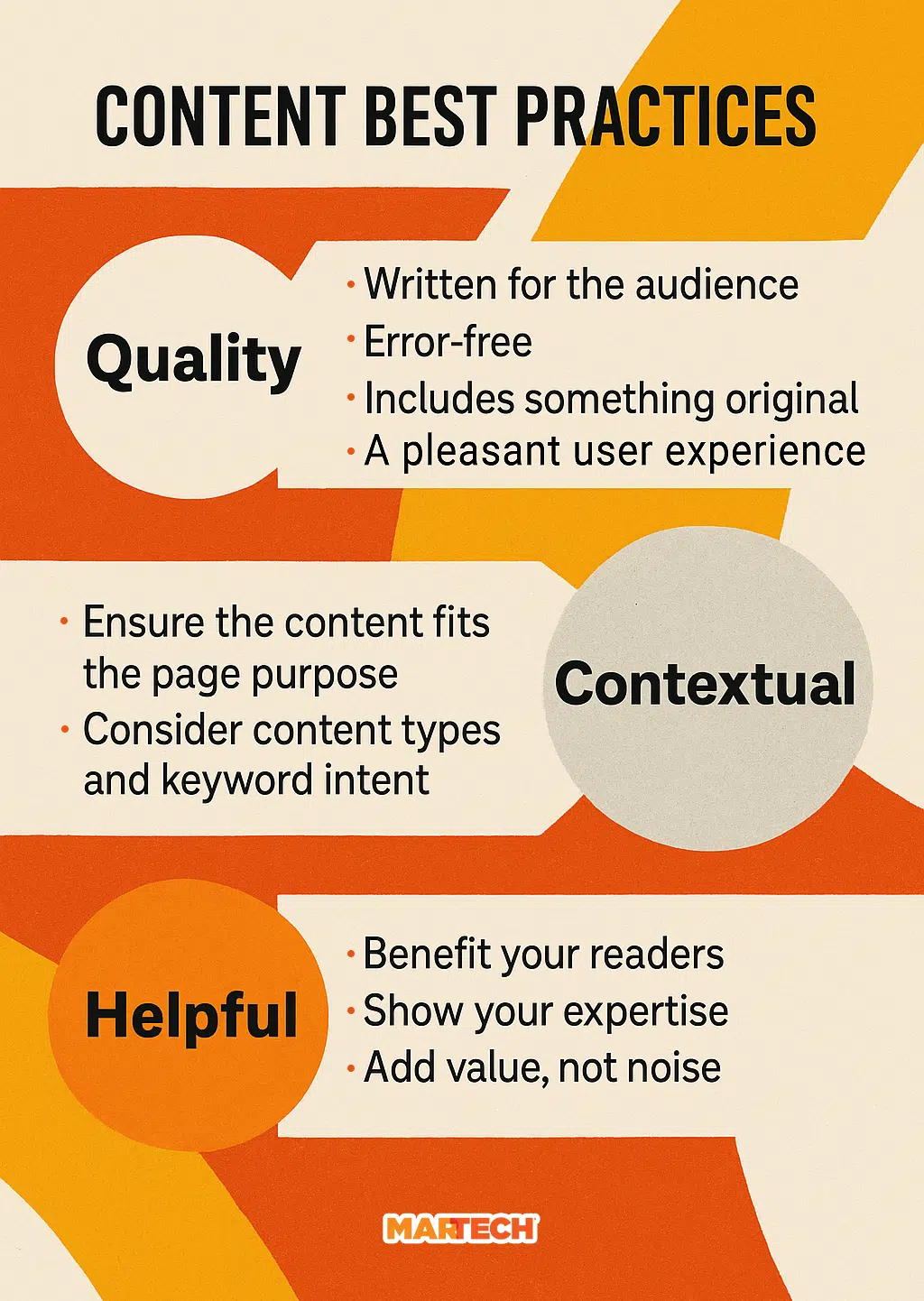

Therefore, successful SEO strategies should prioritize creating high-quality, informative content that addresses user needs comprehensively using highly valuable keywords and topic clusters.

Common mistakes to avoid when using semantic search concepts

As search engine algorithms have evolved, it’s crucial to avoid outdated practices and common pitfalls when optimizing content for semantic search.

Below are key mistakes to avoid:

1. Over-optimizing with irrelevant keywords

Many believe stuffing a page with related keywords (synonyms or similar terms) will enhance semantic search. However, keyword stuffing can harm user experience and may even result in penalties from search engines. The focus should always be on context and meaning rather than keyword frequency.

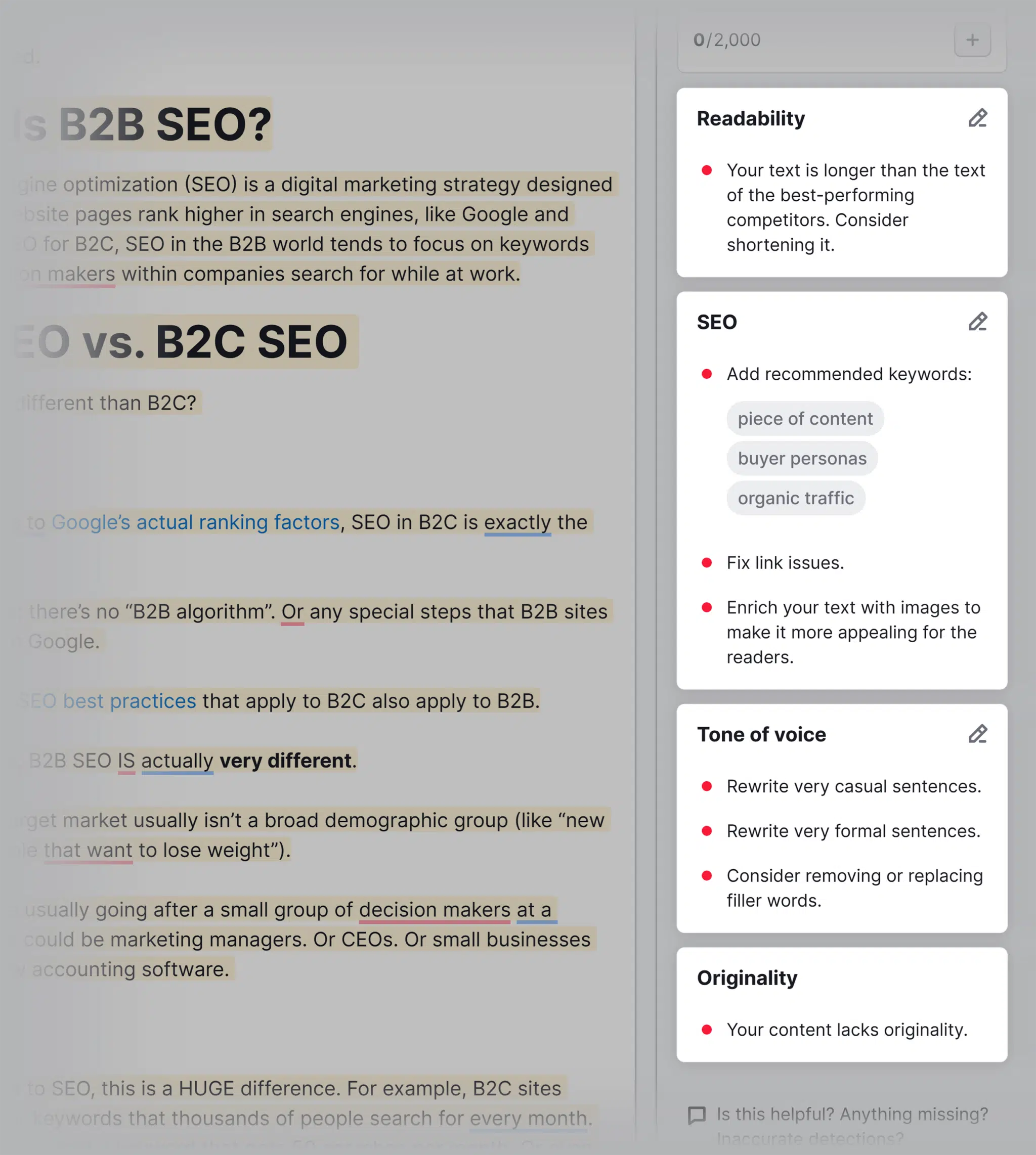

What to do instead: Write naturally about your topic. A good writer, especially a subject matter expert, will likely weave in semantic keywords that help Google contextualize a page. Meeting semantic requirements is a by-product of creating in-depth articles. Tools like the SEO Writing Assistant can help analyze semantic keywords.
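If you want a quick sanity check for overuse of a phrase, a rough keyword-density calculation is easy to script. The sketch below is only an illustration: the function name and sample texts are made up, and no threshold is implied, since the source does not give a “safe” density figure.

```python
import re

def keyword_density(text, keyword):
    """Share of the words in `text` taken up by occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    key_words = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key_words:
        return 0.0
    n = len(key_words)
    hits = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == key_words
    )
    return hits * n / len(words)

# Hypothetical snippets: one stuffed with the phrase, one written naturally.
stuffed = ("Best running shoes. Buy running shoes. "
           "Running shoes for running shoes fans.")
natural = ("These trainers suit flat feet because the midsole "
           "adds arch support on long runs.")

print(f"{keyword_density(stuffed, 'running shoes'):.0%}")
print(f"{keyword_density(natural, 'running shoes'):.0%}")
```

A number like this only flags repetition; it cannot tell you whether the page actually answers the searcher’s question, which is the point of the advice above.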

2. Ignoring user intent

Semantic search prioritizes understanding the user’s intent behind the query rather than just matching keywords. Even if the right semantic keywords are used, content that doesn’t align with what users are searching for will not perform well.

What to do instead: Focus on user intent and what your website visitor needs from your content. Providing helpful content is one element of Google’s ranking system, and Google has been very public and clear about it. It wants to provide useful content that benefits people.

Google’s automated ranking systems are designed to present helpful, reliable information that’s primarily created to benefit people, not to gain search engine rankings, in the top search results.

3. Failing to address topic depth

Semantic search algorithms favor content that covers a topic in depth and breadth. Simply scattering related terms around the content won’t be enough. Search engines assess how well you address the various aspects of a subject.

What to do instead: Write long-form articles that broadly address search intent when necessary. It’s common to skip details for fast content production, especially in this age of AI, but well-researched, accurate, helpful content gives you the best chance of performing in SERPs. You don’t need to write long articles when shorter ones are sufficient. It’s about writing an article the user needs (matching search intent, as mentioned above).

4. Focusing on keywords over topics

Many people still approach semantic search with a keyword-first mindset. Modern SEO focuses more on topics, so it’s essential to create content that thoroughly covers a subject rather than content built around a list of keywords.

However, targeting a particular keyword is still essential because it helps drive relevant traffic. Google generates related keywords based on that keyword, and covering them can add context to your content.

What to do instead: Think about content strategy and not just singular pieces of content. Instead of writing content to cover a keyword, develop a content plan that considers a buyer’s journey and what audiences need from content on your website.

Further reading: A guide to content marketing strategy

5. Overlooking structured data

Structured data (such as schema markup) lets search engines better understand the context and relationships within your content. Many websites fail to use this tool, missing an opportunity to enhance semantic understanding.

What to do instead: Use structured data on your web pages. Visit Schema.org to find relevant schema types for your content. Schema markup gives Google extra context about your page. You can add schema to mark up products, articles, events, or something else. The benefit of schema is that it can influence SERP features like review stars, events added within a SERP feature, and so much more.

How to optimize content using semantic strategies

Optimizing content for search engines goes beyond traditional keyword targeting. It requires an understanding of semantic relevance and user intent. To succeed in this environment, your content must be contextually rich, cover related subtopics, and provide answers to the actual questions your audience is asking.

One effective way to find related keywords is by using Google search suggestions. To discover related keywords, perform a Google search and look at the “People also search for” section at the bottom of the results page.

Here are a few practical strategies for optimizing your content using semantic search principles.

1. Prioritize user intent

One of the most important aspects of semantic optimization is ensuring that your content aligns with user intent. Every query has an intent, and understanding this intent helps you craft content directly addressing what users are looking for.

For example:

- Informational intent: If users search for “how to grow basil,” they likely want a detailed guide. Here, focus on step-by-step instructions, tips, and related subtopics such as “best soil for basil” and “watering schedules.”

- Transactional intent: Users who search for “buy running shoes” are likely ready to purchase. Content optimized for transactional queries should provide product comparisons, links to purchases, and customer reviews.

Action steps:

- Identify the search intent behind the keywords you target.

- Tailor your content to address that intent directly.

2. Focus on contextually relevant content

Search engines like Google look for more than simply a keyword’s frequency on a page—they seek to understand the overall meaning of your content. Therefore, your goal should be to create comprehensive, well-rounded content that thoroughly covers the topic.

This means:

- Addressing subtopics: Cover various facets of the primary subject. For example, if your article is about “best running shoes,” you might also include sections on “shoe materials,” “running surfaces,” and “foot types” to give the reader a more complete understanding.

- Answering questions: Tools like Google’s People Also Ask feature or FAQ schema can help you uncover common questions about your topic. Addressing these questions directly in your content enhances your chances of meeting the user’s specific needs and ranking higher in search results.

It’s also important to use any specific keyword sparingly to avoid keyword stuffing and keep the content sounding natural.

Action steps:

- Research all aspects of the topic you’re writing about

- Answer multiple related questions within the content and provide detailed explanations

- Use subheadings, bullet points, and tables to organize content in a clear, structured manner

3. Target related concepts and synonyms

Rather than overloading your content with a single keyword, incorporate related terms, synonyms, and conceptually relevant phrases.

For example, if your main keyword is “digital marketing,” include related terms such as “online advertising,” “SEO strategies,” and “social media marketing” to signal to search engines that your content is comprehensive.

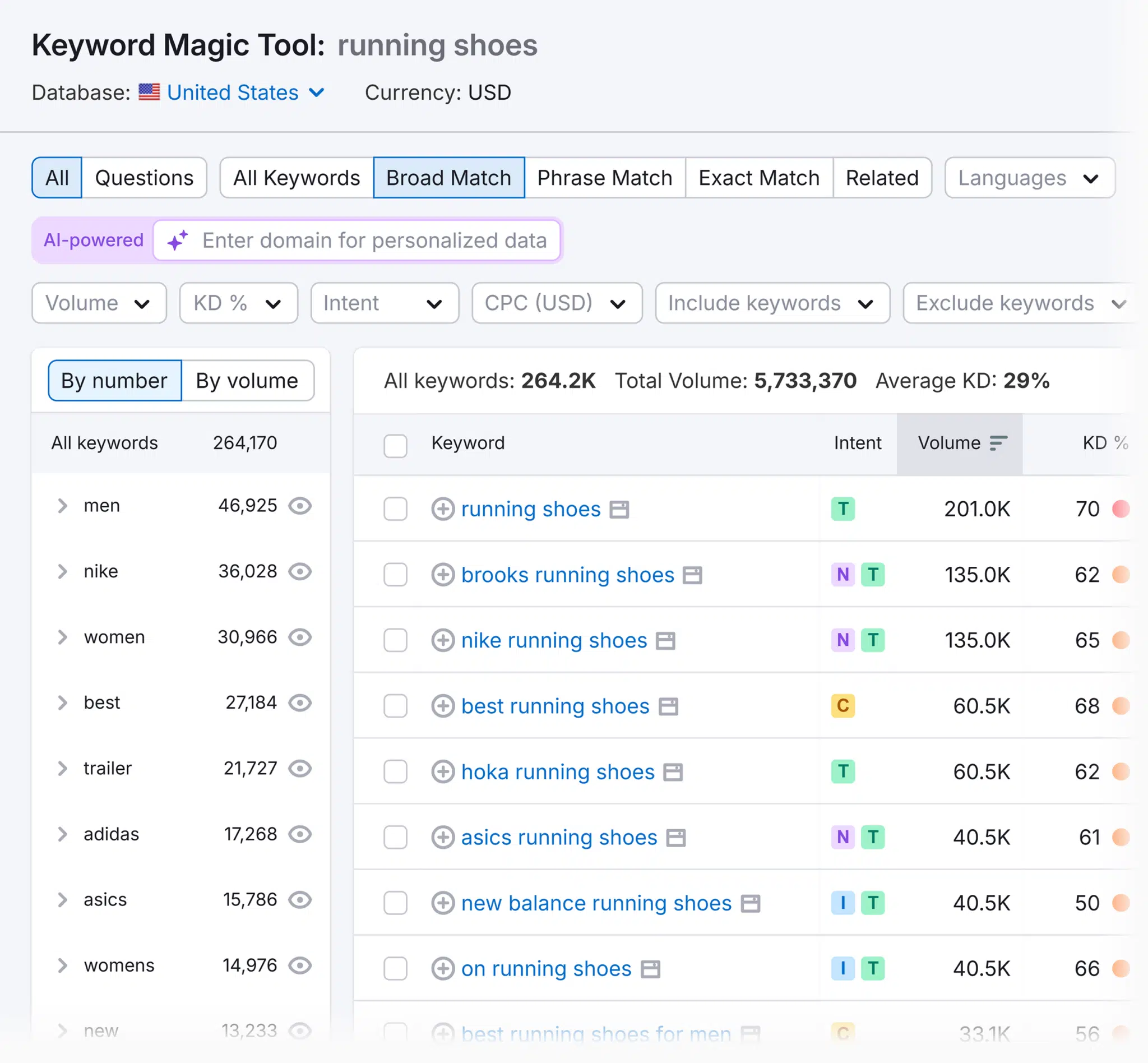

One useful tool for this is the Keyword Magic Tool, which helps you discover a broad range of related terms and phrases. This tool can generate a list of relevant keywords and topics that align with your primary focus.

These suggestions will not only increase the depth of your content but also improve its semantic relevance, helping search engines understand that your article addresses multiple aspects of the subject.

Action steps:

- Use keyword tools to find conceptually relevant keywords and related phrases.

- Integrate these naturally into your content, ensuring they contribute to its quality.

4. Utilize structured data

Structured data (like schema markup) lets search engines understand the relationships between elements in your content. It enhances search engines’ ability to interpret your content and provides opportunities for rich snippets, which can improve click-through rates.

Action steps:

- Add schema markup to your content for things like FAQs, how-to guides, reviews, and products.

- Use Google’s Structured Data Markup Helper to add the appropriate schema tags to your content.
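As one possible illustration of the first action step, the Python sketch below generates FAQ markup as JSON-LD using the Schema.org `FAQPage`, `Question`, and `Answer` types. The helper name and the sample question are assumptions for this example, and whether any rich result appears is up to the search engine.

```python
import json

def build_faq_jsonld(qa_pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }

# Hypothetical FAQ content for a page about this topic.
faq = build_faq_jsonld([
    (
        "Does Google use LSI keywords?",
        "Google representatives have said LSI keywords are not a ranking factor.",
    ),
])

# JSON-LD is typically embedded in the page inside a script tag like this one.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(faq, indent=2)
    + "\n</script>"
)
print(script_tag)
```

Generating the markup from your real question-and-answer content, rather than writing it by hand, helps keep the structured data in sync with what is visible on the page.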

Well-structured content that answers user queries succinctly and thoroughly has a higher chance of being featured in Google’s rich snippets, People Also Ask sections, or as part of Featured Snippets.

5. Leverage natural language processing

Search engines use NLP to understand how language works, enabling them to identify meaning and context from content. When optimizing content, it’s essential to write in a natural, conversational tone that mimics how users search for information.

Unstructured data plays a crucial role in search engine indexing and information retrieval.

Action steps:

- Write for humans first, search engines second, ensuring your content is easily understood.

- Use long-tail keywords and question-based phrases to align with how users search.

6. Build content that encourages engagement

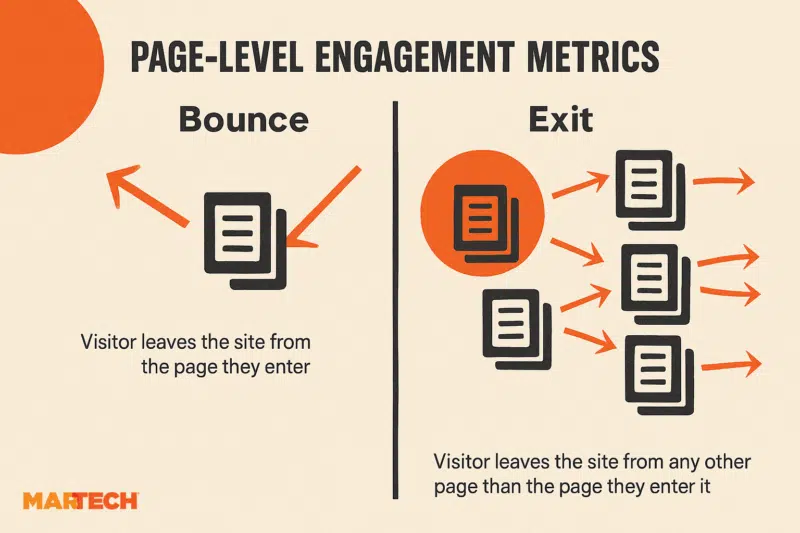

Search engines monitor how users interact with content. Pages that engage users tend to rank better. Providing valuable, engaging content that holds the user’s attention will improve metrics like time on page and bounce rate.

Action steps:

- Use visual aids (images, videos, infographics) to make content more engaging.

- Create interactive elements like quizzes, calculators, or surveys when appropriate.

- Optimize for mobile devices, ensuring a smooth user experience.

7. Use internal and external linking strategically

Internal linking establishes your website’s structure, allowing search engines to better understand your content’s relationships. External linking, on the other hand, signals trustworthiness by citing reputable sources.

Action steps:

- Link relevant internal pages to each other to create a strong, topic-based architecture.

- Include links to authoritative external sources that validate your content.

- Avoid overloading the page with links—keep it natural and focused on value.
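One simple way to audit the first action step is to look for pages that no other page links to. The sketch below assumes you already have a map of each page’s internal links (for example, from a crawl export); the URLs and the function name are hypothetical.

```python
# Hypothetical site map: each page URL maps to the internal pages it links to.
internal_links = {
    "/seo-guide": ["/keyword-research", "/semantic-search"],
    "/keyword-research": ["/seo-guide"],
    "/semantic-search": ["/seo-guide", "/keyword-research"],
    "/structured-data": ["/seo-guide"],
}

def find_orphan_pages(links):
    """Return pages that receive no internal links from other pages."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(page for page in links if page not in linked_to)

print(find_orphan_pages(internal_links))
```

In this made-up site, the structured data page links out to the guide but nothing links back to it, so it would be a candidate for a link from a related page in the same topic cluster.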

Tools for semantic optimization

Semantic optimization requires creating comprehensive, contextually relevant content aligned with user intent. Several tools can assist in keyword research, content generation, and optimization to help ensure that your content covers the depth of a topic and satisfies semantic search principles.

Here are some of the best tools for semantic optimization:

1. Semrush SEO Writing Assistant

Semrush’s SEO Writing Assistant provides real-time suggestions to improve the quality and relevance of your content. It analyzes the semantic richness of your text and recommends related keywords and phrases to ensure that your content is well-rounded and optimized for semantic search.

Features include:

- Readability checks: Ensures your content is easily readable and understandable.

- Targeted keyword suggestions: Based on the main topic, it provides related keywords that enhance semantic relevance.

- Tone of voice: Adjusts the tone of your content to align with the audience’s expectations.

- Plagiarism checker: Ensures originality, which is crucial for SEO success.

2. Google Natural Language API

Google’s Natural Language API is a powerful tool that provides insights into the syntax and semantics of your content.

It uses machine learning models to analyze text and offers features like:

- Entity analysis: Identifies key concepts and relationships between terms, enhancing the semantic depth of your content.

- Sentiment analysis: Evaluates the tone of your content to ensure it resonates with your target audience.

- Content categorization: Automatically categorizes text into topics, which can align content with specific user queries.

3. Clearscope

Clearscope helps you create semantically optimized content by analyzing the top results for your target keyword and generating a list of related terms and topics.

Features include:

- Content grading: Provides a score for your content based on how well it covers related concepts and keywords.

- Topic coverage: Suggests additional subtopics that are related to your main keyword, ensuring that your content is comprehensive and well-rounded.

- Competitor analysis: Helps you understand the semantic strategies used by top-ranking competitors, so you can enhance your content accordingly.

4. Frase.io

Frase.io is an AI-driven content optimization tool that focuses on answering user questions and addressing user intent.

It helps create content that is both contextually relevant and comprehensive by:

- Content brief generation: Automatically generates content briefs based on search intent and top-ranking pages.

- Answer optimization: Helps structure your content to answer user questions effectively, increasing the likelihood of ranking in featured snippets.

- Topic research: Identifies related questions and topics that should be covered to make your content more in-depth and relevant to user queries.

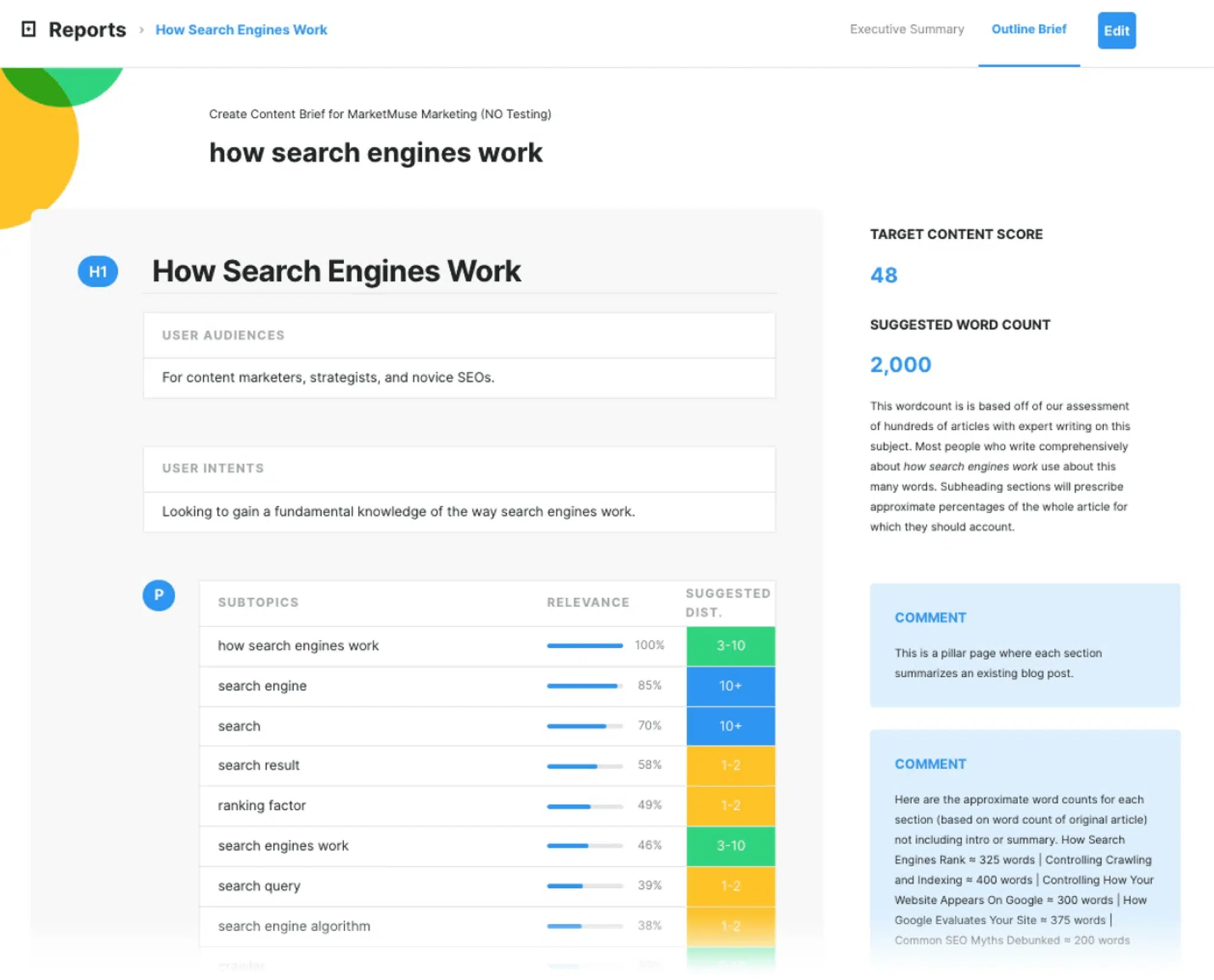

5. MarketMuse

MarketMuse is a semantic content optimization tool that uses AI to help you plan, research, and create high-quality content.

It offers:

- Content gap analysis: Identifies areas where your content may be lacking in coverage compared to top-ranking pages.

- Topic modeling: Suggests related topics and subtopics that can help improve the depth and breadth of your content.

- Optimization score: Provides real-time feedback on how well your content aligns with semantic search principles, ensuring it’s optimized for ranking.

Take action to improve your SEO strategy

If you want your SEO strategy to succeed today, focus on creating high-quality content that comprehensively answers users’ needs. Forget chasing LSI keywords—your priority should be understanding and addressing user intent while covering topics thoroughly.

Ready to create content that buyers (and Google) love? Read how to create content for every stage of the customer journey.