Facebook to use crowdsourcing, fact-checkers and labels to combat fake news

The social giant will tap users to flag potential hoaxes, and then professional fact-checkers to determine veracity.

It’s been roughly five weeks since the US presidential election and the accompanying fake news scandal. After Facebook CEO Mark Zuckerberg initially denied there was a problem, the company (together with Google) moved to cut off ad revenue for fake news sources.

In a blog post late last month, Zuckerberg also signaled a user-based approach to the problem and previewed what the site planned to do:

- Stronger detection. The most important thing we can do is improve our ability to classify misinformation. This means better technical systems to detect what people will flag as false before they do it themselves.

- Easy reporting. Making it much easier for people to report stories as fake will help us catch more misinformation faster.

- Third-party verification. There are many respected fact checking organizations and, while we have reached out to some, we plan to learn from many more.

- Warnings. We are exploring labeling stories that have been flagged as false by third parties or our community, and showing warnings when people read or share them.

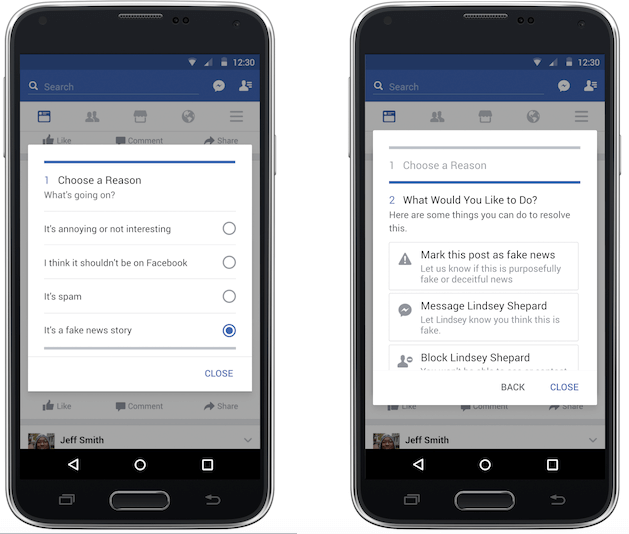

Today, the site is officially rolling out these measures. Its approach, which will evolve, relies on users to identify stories as fake or hoaxes in the first instance and then uses professional third-party fact-checkers to verify their accuracy (or inaccuracy). Here’s what the experience will initially look like (images provided by Facebook):

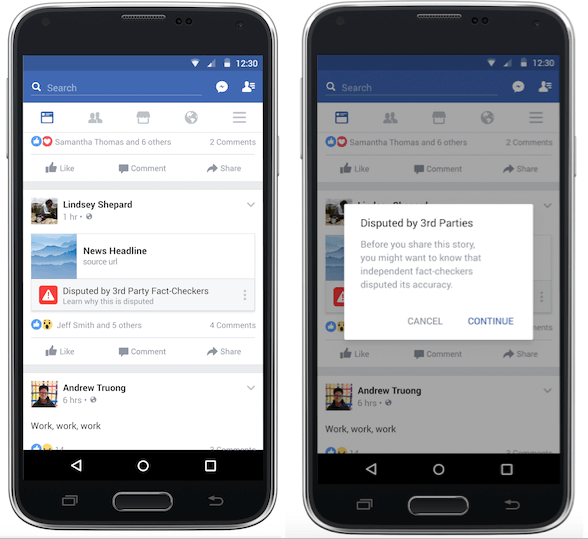

Users can flag a specific post and mark it as “fake news.” If enough people do this to a particular story (Facebook wasn’t specific about how many it would take), the item will be referred to third-party fact-checking organizations:

We’ve started a program to work with third-party fact checking organizations that are part of Poynter’s International Fact Checking Network. We’ll use the reports from our community, along with other signals, to send stories to these organizations. If the fact checking organizations identify a story as fake, it will get flagged as disputed and there will be a link to the corresponding article explaining why. Stories that have been disputed will also appear lower in News Feed.

Facebook won’t remove “fake news” or prevent people from sharing it. However, users will see warnings that indicate the story has been disputed by third-party fact-checkers.

In addition, Facebook said that once a story has been flagged and disputed, it cannot be promoted. The company added that “domain spoofing,” where people are falsely led to believe a story is from a reputable news organization, will be addressed:

On the buying side we’ve eliminated the ability to spoof domains, which will reduce the prevalence of sites that pretend to be real publications. On the publisher side, we are analyzing publisher sites to detect where policy enforcement actions might be necessary — It’s important to us that the stories you see on Facebook are authentic and meaningful.

Facebook is trying to strike a balance between unencumbered sharing and the unfettered proliferation of false information, which may have impacted the presidential election outcome. It’s a laudable first step.

Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.
