6 common agentic AI pitfalls and how to avoid them

Hearing horror stories about agentic AI implementations? Your company needn't be a victim.

There’s a vast gap between agentic AI’s promise and the current implementation reality. As vendors rush to rebrand existing automation as “agentic” and marketers scramble to avoid being left behind, organizations can easily stumble into predictable traps that waste budgets and damage brands.

While researching Agentic AI, decoded: A practical guide for marketers, our new MarTech Intelligence Report, I learned about the approach and mindset that successful agentic AI implementation requires. Check out the full report for details; in the meantime, I’ll share six potential pitfalls that can derail agentic AI initiatives — and how to ensure your team avoids them.

Automation posing as agentic AI

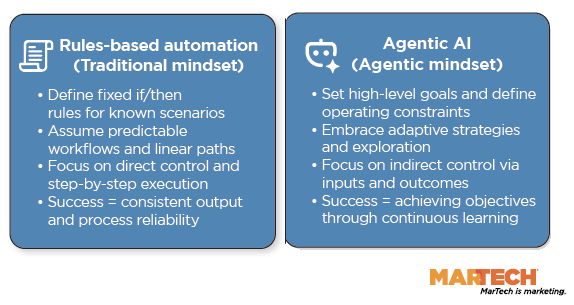

The pitfall: Vendors are applying “agentic” labels to traditional, rules-based automation. You think you’re buying adaptive intelligence that learns and improves, but you’re getting glorified if-then scripts that break when scenarios drift outside predetermined parameters.

How to spot it: Ask vendors to walk through a specific decision the system made recently. If they can’t show you how it reasoned through trade-offs or adapted to unexpected inputs — if every explanation sounds like “when X happens, we do Y” — you’re looking at automation, not agency.

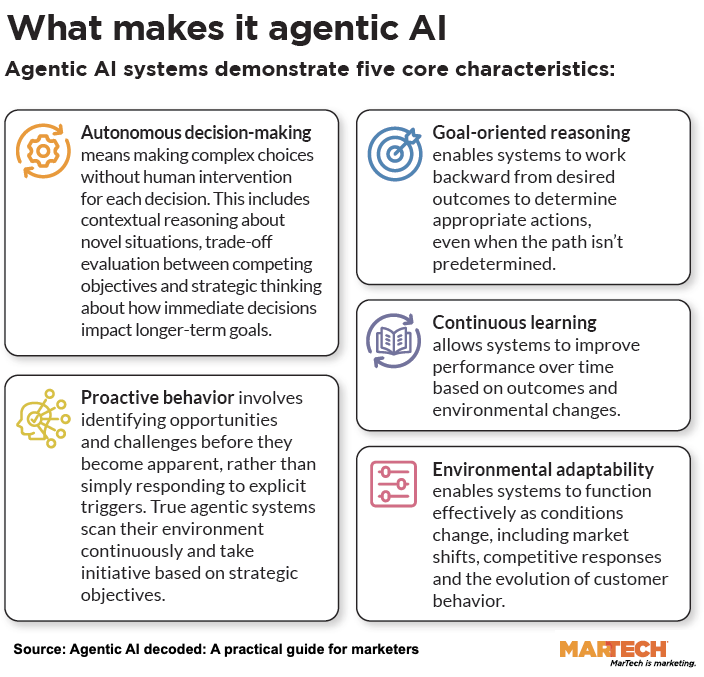

How to avoid it: Require your proof of concept to demonstrate autonomous decision-making. True agents should show goal-oriented reasoning: they work backward from objectives to determine actions rather than forward from triggers to predetermined responses.

Data-set creep

The pitfall: Agents start accessing and processing data fields you never intended them to touch. For example, a customer service agent could start pulling from internal salary databases to “personalize” responses. Or a content agent could scrape competitor websites without authorization.

How to avoid it: Implement field-level access controls before deployment, not after. Create explicit data boundaries in your agent’s configuration and use data loss prevention tools to monitor what agents access. Maintain audit logs of every data source touched. If your vendor can’t show you exactly which fields an agent can access, that’s a red flag.
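Here is a minimal Python sketch of what field-level access controls and audit logs look like. It is not any vendor’s configuration format. It assumes a hypothetical `FieldAccessPolicy` class that holds, for each data source, an allowlist of fields an agent may read. Every read returns only the allowlisted fields and appends an audit entry recording what was returned and what was blocked. In a real deployment, this enforcement would sit in the data layer or the platform’s own permission system, not in agent code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class FieldAccessPolicy:
    """Hypothetical per-agent allowlist: data source -> fields the agent may read."""

    agent_id: str
    allowed_fields: dict[str, set[str]]
    audit_log: list[dict] = field(default_factory=list)

    def read(self, source: str, record: dict) -> dict:
        """Return only allowlisted fields and log every access, including blocked fields."""
        permitted = self.allowed_fields.get(source, set())  # unknown sources get no fields
        visible = {k: v for k, v in record.items() if k in permitted}
        self.audit_log.append(
            {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "agent": self.agent_id,
                "source": source,
                "fields_returned": sorted(visible),
                "fields_blocked": sorted(set(record) - permitted),
            }
        )
        return visible


# Usage: a support agent may see a customer's first name, plan and open tickets,
# but not the email address, and nothing at all from an unlisted salary source.
policy = FieldAccessPolicy(
    agent_id="support-agent",
    allowed_fields={"crm": {"first_name", "plan", "open_tickets"}},
)
customer = {"first_name": "Ana", "plan": "Pro", "open_tickets": 2, "email": "ana@example.com"}
print(policy.read("crm", customer))  # {'first_name': 'Ana', 'plan': 'Pro', 'open_tickets': 2}
print(policy.read("hr_salaries", {"salary": 90000}))  # {}
print(policy.audit_log[0]["fields_blocked"])  # ['email']
```

Denying by default (an unknown source returns no fields) matches the advice to set boundaries before deployment, not after.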

The integration iceberg

The pitfall: That “quick win” pilot turns into a six-month data engineering project. Organizations consistently underestimate the work required to connect customer data platforms, normalize formats across systems and maintain data quality standards that agents require.

How to avoid it: Map your data infrastructure gaps before selecting vendors. Agents need unified customer IDs, consistent event schemas and real-time data streams. Budget three to six months for data preparation and consider starting with single-channel implementations where data complexity is lower.
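The Python sketch below shows what “unified customer IDs” and “consistent event schemas” mean in practice by mapping events from two sources into one shared shape. The `CustomerEvent` schema, the `IDENTITY_MAP` lookup and both raw payload formats are hypothetical. In practice, identity resolution is usually handled by a customer data platform rather than a hand-maintained dictionary.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CustomerEvent:
    """Assumed shared schema that every source system is mapped into."""

    customer_id: str       # unified ID, not the source system's local identifier
    event_type: str
    occurred_at: datetime  # always timezone-aware UTC
    source: str


# Hypothetical identity map: (source, local ID) -> unified customer ID.
IDENTITY_MAP = {
    ("email", "ana@example.com"): "cust-001",
    ("web", "cookie-7f3a"): "cust-001",
}


def normalize_email_event(raw: dict) -> CustomerEvent:
    # Assumed email-platform payload: address as ID, Unix timestamp in seconds.
    return CustomerEvent(
        customer_id=IDENTITY_MAP[("email", raw["address"])],  # KeyError if unresolved
        event_type=raw["kind"],
        occurred_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        source="email",
    )


def normalize_web_event(raw: dict) -> CustomerEvent:
    # Assumed web-analytics payload: cookie as ID, ISO 8601 time with a UTC offset.
    return CustomerEvent(
        customer_id=IDENTITY_MAP[("web", raw["cookie"])],
        event_type=raw["event"],
        occurred_at=datetime.fromisoformat(raw["time"]).astimezone(timezone.utc),
        source="web",
    )


events = [
    normalize_email_event({"address": "ana@example.com", "kind": "email_open", "ts": 1714564800}),
    normalize_web_event({"cookie": "cookie-7f3a", "event": "page_view", "time": "2024-05-01T14:05:00+02:00"}),
]
print({e.customer_id for e in events})  # {'cust-001'}
print([e.occurred_at.isoformat() for e in events])
# ['2024-05-01T12:00:00+00:00', '2024-05-01T12:05:00+00:00']
```

Both events now share one customer ID and one clock, so an agent can order them correctly. Here, an event whose local ID is missing from the map raises a `KeyError`. A real pipeline would more likely route it to a review queue.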

Governance by misstep

The pitfall: Imagine a team that deploys agents able to autonomously publish content, adjust pricing or message customers, then scrambles to add controls after the first crisis. When Anthropic and Andon Labs put an AI model (a version of Anthropic’s Claude, nicknamed Claudius) in charge of an office snack shop, letting it manage inventory, set prices and interact with customers, the AI handled some tasks well. However, it failed to adjust pricing based on demand and frequently handed out discount codes and free merchandise. It even hallucinated a Venmo account and told buyers to send their payments there. Oops!

How to avoid it: Build kill switches and rate limiters into the initial deployment. Every autonomous action needs a maximum boundary (in terms of spend, volume or frequency) and a human-reviewable audit trail. Use staged rollouts in which agents first operate in shadow mode, with their decisions logged but not executed, until you’ve validated their judgment.
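These guardrails can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production control system. The hypothetical `ActionGuard` class applies a kill switch, a volume cap and a spend cap per review window. It starts in shadow mode, where decisions are logged but not executed, and it records every decision, blocked or not, in an audit trail.

```python
from collections.abc import Callable
from dataclasses import dataclass, field

EXECUTED = "executed"
SHADOW = "shadow: logged, not executed"


@dataclass
class ActionGuard:
    """Hypothetical guardrail for one kind of autonomous agent action."""

    max_actions: int          # volume cap per review window (assumed limit)
    max_spend: float          # spend cap per window, e.g. total discount value
    shadow_mode: bool = True  # log decisions without executing them
    killed: bool = False      # kill switch: when True, every action is blocked
    actions_taken: int = 0
    spend_used: float = 0.0
    audit_trail: list[dict] = field(default_factory=list)

    def kill(self) -> None:
        self.killed = True

    def reset_window(self) -> None:
        """Call at the start of each review window (e.g. hourly) to reset the caps."""
        self.actions_taken = 0
        self.spend_used = 0.0

    def submit(self, action: str, cost: float, execute: Callable[[], None]) -> str:
        """Check an action against every boundary, run it only if allowed, and log it."""
        if self.killed:
            status = "blocked: kill switch"
        elif self.actions_taken + 1 > self.max_actions:
            status = "blocked: volume cap"
        elif self.spend_used + cost > self.max_spend:
            status = "blocked: spend cap"
        elif self.shadow_mode:
            status = SHADOW
        else:
            execute()
            status = EXECUTED
        if status in (EXECUTED, SHADOW):
            # Shadow decisions count too, so the shadow log reflects the real caps.
            self.actions_taken += 1
            self.spend_used += cost
        self.audit_trail.append({"action": action, "cost": cost, "status": status})
        return status


sent: list[str] = []
guard = ActionGuard(max_actions=2, max_spend=50.0)
print(guard.submit("10% discount code", 10.0, lambda: sent.append("code")))  # shadow: logged, not executed
guard.shadow_mode = False  # go live only after a human has reviewed the shadow log
print(guard.submit("10% discount code", 10.0, lambda: sent.append("code")))  # executed
print(guard.submit("free item", 5.0, lambda: sent.append("item")))  # blocked: volume cap
```

Counting shadow-mode decisions against the caps is one design choice among several. It lets the shadow log show what the limits would have allowed in live operation.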

The skills gap issue

The pitfall: Organizations buy sophisticated agentic platforms, then realize nobody knows how to define the outcomes agents should optimize for, interpret agent decisions or troubleshoot when things go wrong. The technology sits underused while teams wait for “AI experts” who are scarce in the current job market.

How to avoid it: Identify who will own agent operations before purchasing. This isn’t a part-time responsibility; you need dedicated staff who understand both marketing objectives and AI operations. Invest in upskilling your current marketing ops teams. If you lack internal capabilities, start with vendor-managed solutions or vendor-provided training.

Elusive ROI

The pitfall: Teams celebrate time savings and efficiency gains while overlooking the fact that agents aren’t actually driving revenue. In effect, you’ve automated bad tactics so they run faster.

How to avoid it: Measure revenue contribution and customer lifetime value (CLV), not just operational metrics. If your agents are perfectly executing a flawed strategy, you’ve only accelerated your way to poor results. Set business-outcome KPIs from day one, alongside efficiency metrics.

Moving forward

You can avoid these pitfalls with rigorous evaluation frameworks and a staged implementation. Before deploying agentic AI, assess your organization’s readiness across data, skills and governance. Start with narrow, well-bounded use cases where you can validate performance before expanding the scope.

For a comprehensive framework including detailed evaluation checklists, maturity models and vendor assessment criteria, download MarTech’s new report — Agentic AI, decoded: A practical guide for marketers.

MarTech is owned by Semrush. We remain committed to providing high-quality coverage of marketing topics. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.
