Free speech, Meta, data privacy and email: A delicate balance or a complete disconnect?

The growing divide in how to tackle moderation, misinformation and privacy regulation will have a profound impact on consumer trust.

Meta’s recent decision to replace fact-checkers with a community notes model like X’s highlights a growing divide in how digital platforms handle free speech and content moderation. It also reflects a deeper disconnect across digital channels. These divergent approaches to moderation, combined with escalating data privacy concerns, pose significant challenges for marketers and consumers.

At the heart of this lies a critical tension: How do we balance the principles of free speech with the need for trust and accountability? How can consumer demands for data privacy coexist with the realities of effective digital marketing? These unresolved questions point to a rift that could fundamentally reshape the future of digital marketing and online trust.

Background: A timeline

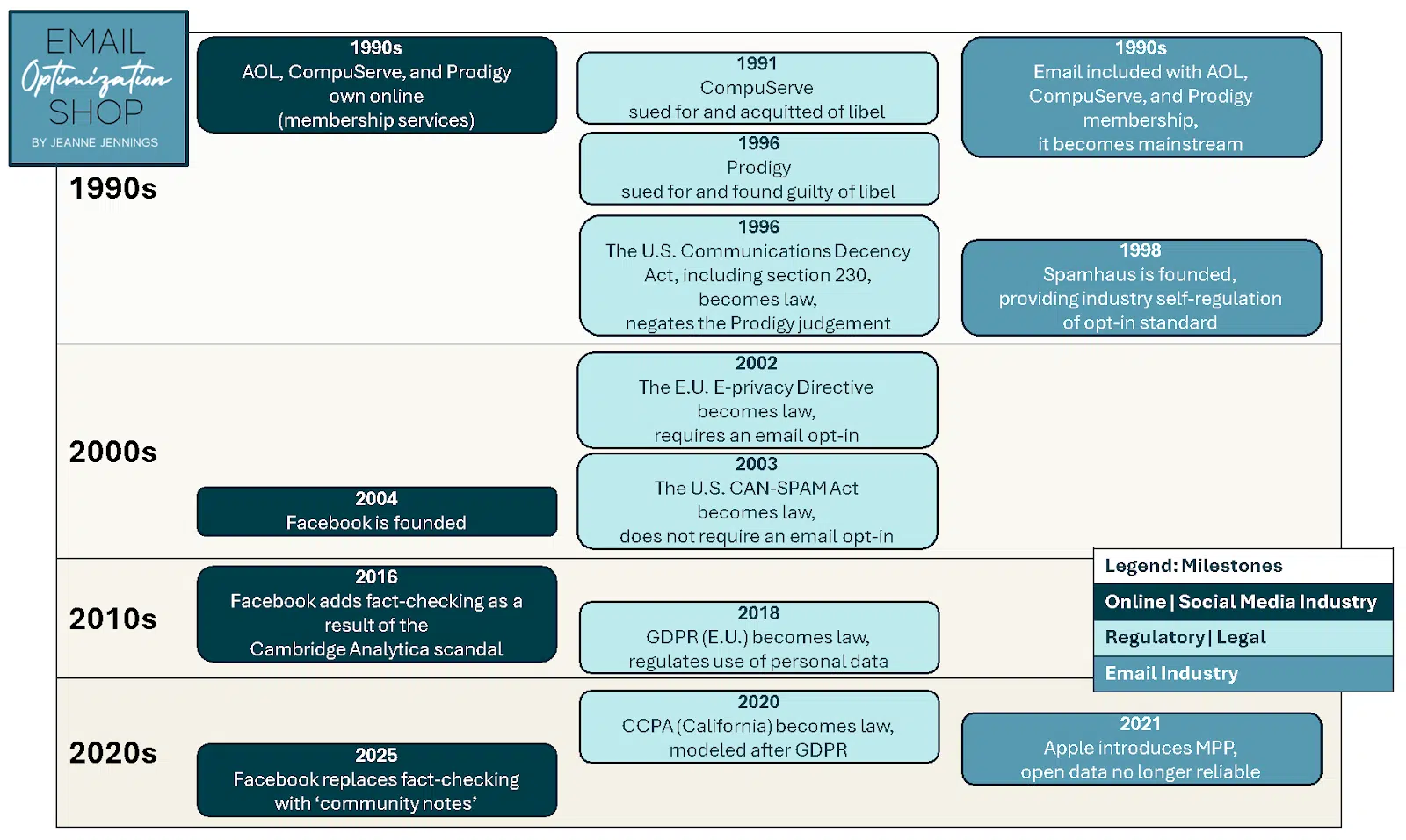

To understand the current situation, it’s helpful to review how we got here. The timeline traces key milestones in social media, email marketing, and regulatory and legal developments since the 1990s.

CompuServe, Prodigy, Meta and Section 230

In the early 1990s, online platforms like CompuServe and Prodigy faced critical legal challenges over user-generated content. In 1991, a federal court ruled that CompuServe was not liable for libel because it acted as a neutral distributor of content, much like a soapbox in a public square. In 1995, however, a New York court held that Prodigy could be treated as a publisher, and therefore held liable, because it actively moderated content.

To address these contradictory rulings and preserve internet innovation, Congress passed the Communications Decency Act of 1996. Its Section 230 shields platforms from liability for user-generated content. This allowed platforms like Facebook (founded in 2004) to thrive without fear of being treated as publishers.

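Fast-forward to 2016, when Facebook faced public scrutiny over misinformation spread on the platform during the U.S. presidential election. In 2018, the Cambridge Analytica scandal brought further scrutiny. CEO Mark Zuckerberg acknowledged the platform’s responsibility, and in late 2016 Facebook introduced third-party fact-checking to combat misinformation.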

Yet in 2025, Meta’s new policy shifts responsibility for content moderation back to users, citing Section 230 protections.

Email marketing, blocklists and self-regulation

Email marketing, one of the earliest digital channels, took a different path. By the late 1990s, spam threatened to overwhelm inboxes. This prompted the creation of blocklist providers such as Spamhaus (founded in 1998). Blocklists allowed the industry to self-regulate effectively, preserving email as a viable marketing channel.

The CAN-SPAM Act of 2003 set baseline standards for commercial email, such as requiring unsubscribe options. Still, its opt-out approach fell short of two stricter regimes: the opt-in requirements of the EU’s 2002 ePrivacy Directive and the standards enforced by blocklist providers. Email marketers largely embraced opt-in standards to build trust and protect the channel’s integrity. As of 2025, the industry still relies on blocklists.

GDPR, CCPA, Apple MPP and consumer privacy

Rising consumer awareness about data privacy led to landmark regulations like the EU’s General Data Protection Regulation (GDPR) in 2018 and the California Consumer Privacy Act (CCPA) in 2020. These laws gave consumers greater control over their personal data. That control includes the right to know what data is collected and how it is used, to have it deleted and to opt out of its sale.

GDPR requires a lawful basis, such as opt-in consent, before personal data is processed. CCPA instead follows an opt-out model that emphasizes transparency. These regulations posed challenges for marketers reliant on personalized targeting, but the industry is adapting. Social platforms, however, continue to rely on implicit consent and broad data policies, creating inconsistencies in the user experience.

Then in 2021, Apple introduced Mail Privacy Protection (MPP). MPP preloads remote email content, including tracking pixels, which made email open rate data unreliable.

Dig deeper: U.S. state data privacy laws: What you need to know

Considerations

Consumer concerns and trade-offs

Consumers increasingly demand control over their data, but many are unaware of the trade-off: less data means less personalized and less relevant marketing. This paradox leaves marketers in a challenging position, balancing privacy with effective outreach.

The value of moderation: Lessons from email marketing and other social media platforms

Without blocklists like those from Spamhaus, email would have devolved into a cesspool of spam and scams, rendering the channel unusable. Social media platforms face a similar dilemma. Fact-checking, while imperfect, is critical for maintaining trust and usability, especially in an era when misinformation erodes public confidence in institutions.

Meanwhile, platforms like TikTok and Pinterest seem to have largely avoided these controversies over moderation. Are they less politically charged, or have they developed more effective fact-checking strategies? Their approaches offer potential lessons for Meta and others.

Technology as a solution, not an obstacle

Meta’s concerns about false positives in fact-checking mirror the challenges email marketers faced when spam filters wrongly blocked legitimate messages. Advances in AI and machine learning have significantly improved email spam filtering, reducing errors and preserving trust. Social platforms could adopt similar technologies to improve content moderation rather than retreat from that responsibility.

Dig deeper: Marketers, it’s time to walk the walk on responsible media

The bigger picture: What’s at stake?

Imagine a social media platform overwhelmed by misinformation because of inadequate moderation. Now imagine it also filled with irrelevant marketing messages because strict privacy policies limit the data available for targeting. Is this the kind of place where you’d choose to spend your time online?

Misinformation and privacy concerns raise critical questions about the future of social media platforms. Will they lose user trust, as X did after rolling back content moderation? Will platforms that moderate only the most egregious misinformation become echo chambers of unverified content? Will a lack of relevance hurt the quality and revenue of digital marketing on these platforms?

Fixing the disconnect

Here are some actionable steps that can help reconcile these competing priorities and ensure a more cohesive digital ecosystem:

- Unified standards across channels: Establish baseline privacy and content moderation standards across digital marketing channels.

- Proactive consumer education: Educate users about how data and content are managed across platforms and about the pros and cons of strict data privacy requirements. Give consumers clear information and more than all-or-nothing choices on data privacy.

- Use AI for moderation: Invest in technology to enhance accuracy and reduce errors in content moderation.

- Encourage global regulatory alignment: Preemptively align with stricter privacy laws like GDPR to future-proof operations. The U.S. Congress has yet to pass a comprehensive federal privacy law, even as individual states pass their own.

To secure the future of social platforms and other digital spaces, we must address the challenges of free speech and data privacy. This requires collaboration and innovation across the industry to build trust with users and to keep providing a positive online experience in every channel.

Dig deeper: How to balance ROAS, brand safety and suitability in social media advertising

Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the martech community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.
