Invisible pages, lost revenue: Why crawlability poses a huge risk

Are you wasting crawl budget on the wrong pages? Learn how to protect revenue with smarter crawl strategies.

While online discussion obsesses over whether ChatGPT spells the end of Google, websites are losing revenue to a more concrete and immediate problem: some of their most valuable pages are invisible to the systems that matter.

Because while the bots have changed, the game hasn’t. Your website content needs to be crawlable.

Between May 2024 and May 2025, AI crawler traffic surged by 96%, with GPTBot’s share jumping from 5% to 30%. But this growth isn’t replacing traditional search traffic.

Semrush’s analysis of 260 billion rows of clickstream data showed that people who start using ChatGPT maintain their Google search habits. They’re not switching; they’re expanding.

This means enterprise sites need to satisfy both traditional crawlers and AI systems while working within the same crawl budget they had before.

The dilemma: Crawl volume vs. revenue impact

Many companies get crawlability wrong by focusing on what they can easily measure (total pages crawled) rather than what actually drives revenue (which pages get crawled).

When Cloudflare analyzed AI crawler behavior, they discovered a troubling inefficiency. For example, for every visitor Anthropic’s Claude refers back to websites, ClaudeBot crawls tens of thousands of pages. This unbalanced crawl-to-referral ratio reveals a fundamental asymmetry of modern search: massive consumption, minimal traffic return.
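You can track the same kind of ratio for your own site. The minimal Python sketch below assumes you have already parsed your access logs into `(user_agent, referrer)` pairs; the `ClaudeBot` token and the `claude.ai` referrer host are illustrative assumptions, and this is not the methodology Cloudflare used for its network-wide analysis.

```python
from urllib.parse import urlparse


def crawl_to_referral_ratio(entries, bot_token, referrer_host):
    """Return crawler requests per referred visit for one AI platform.

    `entries` is an iterable of (user_agent, referrer) tuples taken from
    access logs (a hypothetical pre-processing step).
    """
    crawls = 0
    referrals = 0
    for user_agent, referrer in entries:
        if bot_token in user_agent:
            crawls += 1  # a request made by the platform's crawler
        elif referrer and urlparse(referrer).hostname == referrer_host:
            referrals += 1  # a human visit sent from the platform
    if referrals == 0:
        return float("inf")  # pages consumed, no visitors returned
    return crawls / referrals
```

A ratio that keeps climbing tells you that crawler is consuming far more of your server capacity than it returns in traffic.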

That’s why it’s imperative for crawl budgets to be effectively directed towards your most valuable pages. In many cases, the problem isn’t about having too many pages. It’s about the wrong pages consuming your crawl budget.

The PAVE framework: Prioritizing for revenue

The PAVE framework helps manage crawlability across both search channels. It offers four dimensions that determine whether a page deserves crawl budget:

- P – Potential: Does this page have realistic ranking or referral potential? Not all pages should be crawled. If a page isn’t conversion-optimized, provides thin content, or has minimal ranking potential, you’re wasting crawl budget that could go to value-generating pages.

- A – Authority: Authority markers are familiar from Google, but as shown in Semrush Enterprise’s AI Visibility Index, if your content lacks sufficient authority signals, such as clear E-E-A-T and domain credibility, AI bots will also skip it.

- V – Value: How much unique, synthesizable information exists per crawl request? Pages requiring JavaScript rendering take 9x longer to crawl than static HTML. And remember: most AI crawlers don’t execute JavaScript at all.

- E – Evolution: How often does this page change in meaningful ways? Crawl demand increases for pages that update frequently with valuable content, while pages that rarely change get deprioritized automatically.
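The framework doesn’t prescribe a scoring scale, so the 0–3 scores and the “low” threshold in this Python sketch are assumptions for illustration. It ranks pages by combined PAVE score and flags pages that score low on all four dimensions as candidates for blocking or consolidation.

```python
from dataclasses import dataclass


@dataclass
class PageScore:
    url: str
    potential: int  # 0-3: realistic ranking or referral potential
    authority: int  # 0-3: E-E-A-T and credibility signals
    value: int      # 0-3: unique information per crawl request
    evolution: int  # 0-3: frequency of meaningful updates

    def scores(self):
        return (self.potential, self.authority, self.value, self.evolution)

    def total(self) -> int:
        return sum(self.scores())

    def is_low_everywhere(self, threshold: int = 1) -> bool:
        # True only when every dimension is at or below the threshold.
        return all(s <= threshold for s in self.scores())


def triage(pages, threshold: int = 1):
    """Return (crawl priorities, block/consolidate candidates) as URL lists."""
    candidates = [p.url for p in pages if p.is_low_everywhere(threshold)]
    keep = [p for p in pages if not p.is_low_everywhere(threshold)]
    keep.sort(key=PageScore.total, reverse=True)  # highest combined score first
    return [p.url for p in keep], candidates
```

In practice the scores would come from your own data (rankings, referral traffic, content freshness), not from manual guesses.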

Server-side rendering is a revenue multiplier

JavaScript-heavy sites are paying a 9x rendering tax on their crawl budget in Google. And most AI crawlers don’t execute JavaScript. They grab raw HTML and move on.

If you’re relying on client-side rendering (CSR), where content assembles in the browser after JavaScript runs, you’re hurting your crawl budget.
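To see roughly what a non-rendering crawler gets, you can extract the readable text from the raw HTML while ignoring script contents. This Python sketch uses only the standard library’s `html.parser`; it approximates a crawler that doesn’t execute JavaScript and is not how any specific bot parses pages.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect text a non-rendering crawler could read from raw HTML."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Script bodies arrive here too, so skip them explicitly.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def raw_html_text(html: str) -> str:
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

For a typical CSR shell such as `<div id="root"></div><script>…</script>`, the price only exists inside the script, so the extracted text is empty; the same product rendered as plain HTML yields its name and price directly.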

Server-side rendering (SSR) flips the equation entirely.

With SSR, your web server pre-builds the full HTML before sending it to browsers or bots. No JavaScript execution is needed to access the main content: the bot gets everything it needs in the first request. Product names, pricing, and descriptions are all immediately visible and indexable.
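Production sites usually get SSR from their web framework, but the principle fits in a few lines. This standard-library Python sketch uses a hypothetical in-memory catalog: the server assembles the complete product page, so browsers and bots receive identical, fully populated HTML.

```python
from html import escape
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical catalog; a real site would load this from a database or API.
PRODUCTS = {
    "/products/trail-boot": {
        "name": "Trail Boot",
        "price": "149.00",
        "currency": "USD",
        "description": "Waterproof leather hiking boot.",
    },
}


def render_product(product: dict) -> str:
    """Assemble the full page on the server: no JavaScript needed to read it."""
    return (
        "<!doctype html><html><head>"
        f"<title>{escape(product['name'])}</title></head><body>"
        f"<h1>{escape(product['name'])}</h1>"
        f'<p class="price">{escape(product["currency"])} {escape(product["price"])}</p>'
        f"<p>{escape(product['description'])}</p>"
        "</body></html>"
    )


class SSRHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        product = PRODUCTS.get(self.path)
        if product is None:
            self.send_error(404)
            return
        body = render_product(product).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)  # bots and browsers get the same complete HTML


def serve(port: int = 8000) -> None:
    """Start a local server for manual testing (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), SSRHandler).serve_forever()
```

After calling `serve()`, a request to `/products/trail-boot` with a tool that doesn’t run JavaScript, such as `curl`, returns the name and price directly, which is what a non-rendering AI crawler would see.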

But here’s where SSR becomes a true revenue multiplier: this added speed doesn’t just help bots, but also dramatically improves conversion rates.

Deloitte’s analysis with Google found that a mere 0.1 second improvement in mobile load time drives:

- 8.4% increase in retail conversions

- 10.1% increase in travel conversions

- 9.2% increase in average order value for retail

SSR makes pages load faster for users and bots because the server does the heavy lifting once, then serves the pre-rendered result to everyone. No redundant client-side processing. No JavaScript execution delays. Just fast, crawlable, convertible pages.

For enterprise sites with millions of pages, SSR might be a key factor in whether bots and users actually see – and convert on – your highest-value content.

The disconnected data gap

Many businesses are flying blind due to disconnected data.

- Crawl logs live in one system.

- Your SEO rank tracking lives in another.

- Your AI search monitoring in a third.

This makes it nearly impossible to definitively answer the question: “Which crawl issues are costing us revenue right now?”

This fragmentation compounds the cost of making decisions without complete information. Every day you operate with siloed data, you risk optimizing for the wrong priorities.

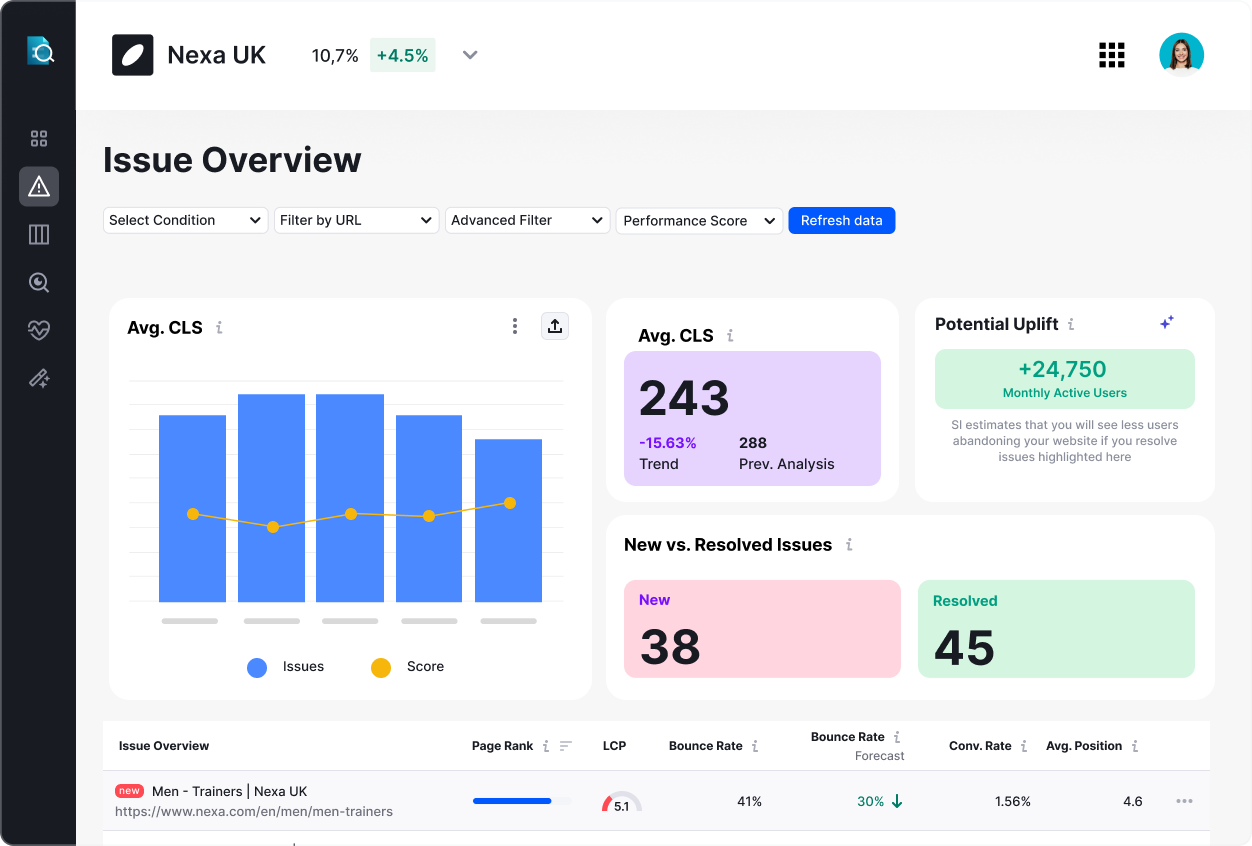

The businesses that solve crawlability and manage their site health at scale don’t just collect more data. They unify crawl intelligence with search performance data to create a complete picture.

When teams can segment crawl data by business unit, compare pre- and post-deployment performance side by side, and correlate crawl health with actual search visibility, they turn crawl budget from a technical mystery into a strategic lever.
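A minimal Python sketch of that unification, assuming you can export crawl rows as `(url, bot_hits, status)` and performance rows as `(url, clicks, revenue)`; the function name and the URL-to-business-unit mapping are hypothetical. It joins the two sources on URL and rolls the result up by business unit.

```python
from collections import defaultdict


def segment_report(crawl_rows, perf_rows, unit_of):
    """Join crawl stats with search performance per URL, then roll up by unit.

    crawl_rows: iterable of (url, bot_hits, status_code)
    perf_rows:  iterable of (url, clicks, revenue)
    unit_of:    function mapping a URL to a business-unit label
    Only URLs present in crawl_rows appear in the report.
    """
    perf = {url: (clicks, revenue) for url, clicks, revenue in perf_rows}
    report = defaultdict(lambda: {"bot_hits": 0, "errors": 0, "clicks": 0, "revenue": 0.0})
    for url, bot_hits, status in crawl_rows:
        row = report[unit_of(url)]
        clicks, revenue = perf.get(url, (0, 0.0))  # no search data -> zero
        row["bot_hits"] += bot_hits
        row["errors"] += 1 if status >= 400 else 0
        row["clicks"] += clicks
        row["revenue"] += revenue
    return dict(report)
```

Placing bot hits next to revenue per unit makes it easy to spot segments that absorb crawl budget without producing search value.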

3 immediate actions to protect revenue

1. Conduct a crawl audit using the PAVE framework

Use Google Search Console’s Crawl Stats report alongside log file analysis to identify which URLs consume the most crawl budget. But here’s where most enterprises hit a wall: Google Search Console wasn’t built for complex, multi-regional sites with millions of pages.

This is where scalable site health management becomes critical. Global teams need the ability to segment crawl data by region, product line, or language to see exactly which parts of their website are burning budget instead of driving conversions. Semrush Enterprise’s Site Intelligence enables this kind of precise segmentation.
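If you’re starting from raw server logs, a first pass at segmentation can be as simple as counting bot requests per top-level path segment. This Python sketch assumes the common Apache/Nginx “combined” log format and a locale-first URL structure such as `/us/` or `/de/`. It matches bots by user-agent string only, which can be spoofed; Google recommends verifying Googlebot with a reverse DNS lookup.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Combined log format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawl_hits_by_segment(lines, bot_token="Googlebot"):
    """Count bot requests per top-level path segment (e.g. us, de)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or bot_token not in match["agent"]:
            continue  # malformed line or not the bot we're measuring
        path = urlsplit(match["path"]).path  # drop the query string
        segment = path.strip("/").split("/", 1)[0] or "(root)"
        hits[segment] += 1
    return hits
```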

Once you have an overview, apply the PAVE framework: if a page scores low on all four dimensions, consider blocking it from crawls or consolidating it with other content.
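Before shipping a robots.txt change, it’s worth confirming that it blocks the low-value URLs and nothing else. This sketch uses Python’s standard `urllib.robotparser`; the rules and URLs are hypothetical. Python’s parser applies simple prefix matching and doesn’t implement Google’s wildcard extensions, so treat it as a sanity check rather than an exact model of any crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep internal search and faceted filter URLs,
# which would score low on all four PAVE dimensions, away from every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /filter/
"""


def crawl_access(robots_txt: str, agents, urls) -> dict:
    """Return {(agent, url): allowed?} for every agent/URL combination."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # mark rules as loaded so can_fetch() doesn't default to False
    return {(agent, url): parser.can_fetch(agent, url) for agent in agents for url in urls}
```

Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, so consolidation or `noindex` may be the better tool for some pages.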

Targeted fixes, such as improving internal linking, fixing page depth issues, and updating sitemaps to include only indexable URLs, can also pay huge dividends.
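A sketch of the sitemap step in Python, assuming you already have crawl results with status code, `noindex` flag, and declared canonical for each URL (the `Page` fields are hypothetical). Only URLs that return 200, carry no `noindex`, and are their own canonical make it into the sitemap.

```python
from dataclasses import dataclass
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    status: int      # HTTP status from the latest crawl
    noindex: bool    # robots meta tag or X-Robots-Tag says noindex
    canonical: str   # canonical URL declared by the page

    def is_indexable(self) -> bool:
        return self.status == 200 and not self.noindex and self.canonical == self.url


def build_sitemap(pages) -> str:
    """Emit a sitemap that lists only indexable, self-canonical URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.is_indexable():
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")
```

Parameter variants, redirects, and `noindex` pages are dropped, so the sitemap points crawlers only at URLs you actually want indexed.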

2. Implement continuous monitoring, not periodic audits

Most businesses conduct quarterly or annual audits, taking a snapshot in time and calling it a day.

But crawl budget and wider site health problems don’t wait for your audit schedule. A deployment on Tuesday can silently make key pages invisible by Wednesday, and you won’t discover it until your next review, after weeks of lost revenue.

The solution is implementing monitoring that catches issues before they compound. When you can align audits with deployments, track your site historically, and compare releases or environments side-by-side, you move from reactive fire drills into a proactive revenue protection system.
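One way to align checks with deployments is to crawl key URLs before and after each release and diff the results. This Python sketch assumes each snapshot maps URL to its status code and `noindex` flag; the snapshot format and function name are hypothetical. It reports pages that were indexable before the release but aren’t afterward.

```python
def indexability_regressions(before: dict, after: dict) -> list:
    """List (url, reason) for URLs indexable in `before` but not in `after`.

    Each snapshot maps URL -> {"status": int, "noindex": bool}, for example
    from crawls run immediately before and after a release.
    """

    def indexable(info) -> bool:
        return info["status"] == 200 and not info["noindex"]

    regressions = []
    for url, old in before.items():
        if not indexable(old):
            continue  # only track pages that were visible before the release
        new = after.get(url)
        if new is None:
            regressions.append((url, "missing from new crawl"))
        elif new["status"] != 200:
            regressions.append((url, f"status {new['status']}"))
        elif new["noindex"]:
            regressions.append((url, "noindex added"))
    return sorted(regressions)
```

Run as a post-deployment step, a non-empty result can fail the pipeline or alert the team the same day instead of at the next quarterly audit.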

3. Systematically build your AI authority

AI search operates in stages. When users research general topics (“best waterproof hiking boots”), AI synthesizes from review sites and comparison content. But when users investigate specific brands or products (“are Salomon X Ultra waterproof, and how much do they cost?”), AI shifts its research approach entirely.

Your official website becomes the primary source. This is the authority game, and most enterprises are losing it by neglecting their foundational information architecture.

Here’s a quick checklist:

- Ensure your product descriptions are factual, comprehensive, ungated, and not dependent on JavaScript to load

- Clearly state vital information like pricing in static HTML

- Use structured data markup for technical specifications

- Host feature comparisons on your own domain rather than relying on third-party sites
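For the structured data item on the checklist, schema.org’s `Product` type can carry both pricing (via an `Offer`) and technical specifications (via `additionalProperty`). This Python sketch builds that markup as JSON-LD for embedding in server-rendered HTML; the product values and helper name are hypothetical, and you should validate the output against the search engines’ own structured data guidelines.

```python
import json


def product_jsonld(name, description, sku, price, currency, specs) -> str:
    """Build a schema.org Product JSON-LD <script> tag.

    `specs` maps specification name -> value; each becomes a PropertyValue.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "additionalProperty": [
            {"@type": "PropertyValue", "name": k, "value": v} for k, v in specs.items()
        ],
        "offers": {"@type": "Offer", "price": price, "priceCurrency": currency},
    }
    # Escape "</" so a value containing "</script>" can't close the tag early.
    payload = json.dumps(data).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

Because the tag is part of the static HTML, crawlers that never run JavaScript still receive the price and specifications.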

Visibility is profitability

Your crawl budget problem is really a revenue recognition problem disguised as a technical issue.

Every day that high-value pages are invisible is a day of lost competitive positioning, missed conversions, and compounding revenue loss.

With AI crawler traffic surging and ChatGPT now reporting over 700 million weekly users, the stakes have never been higher.

The winners won’t be those with the most pages or the most sophisticated content, but those who optimize site health so bots reach their highest-value pages first.

For enterprises managing millions of pages across multiple regions, consider how unified crawl intelligence, combining deep crawl data with search performance metrics, can transform your site health management from a technical headache into a revenue protection system. Learn more about Site Intelligence by Semrush Enterprise.

Opinions expressed in this article are those of the sponsor. MarTech neither confirms nor disputes any of the conclusions presented above.
