How to see inside the AI funnel

Learn how AI tracking tools combine synthetic and real-world data to restore visibility into the AI marketing funnel and enable actionable strategy.

For two decades, marketing was a game of visibility and performance metrics. We built funnels, tracked clicks and optimized journeys because we could see what was happening. We optimized for the “messy middle”: the customer journeys we could track on the open web. The premise that we can see and track everything is now obsolete. The customer journey has migrated to closed AI environments, leaving our analytics stacks blind, and the causal link between action and result has evaporated. Welcome to the age of inference, where being included in a model’s reasoning can matter as much as being clicked by a human.

The end of measurement as we know it

This shift doesn’t just break our attribution models; it breaks marketing as a measurable discipline. The dashboards and metrics we relied on now feel like comfort metrics that offer only an illusion of control. When an AI assistant recommends a product based on Reddit sentiment, embedded documentation or the semantic context of a YouTube review, your analytics capture none of that journey. The old funnel is dead, and marketers who fail to adapt will be optimizing a ghost. You see the final conversion but lose the story behind it, which leaves you with little diagnostic power.

In response, a new market of AI tracking tools has emerged, promising to restore visibility. You have most likely heard of industry darlings like Semrush’s Enterprise AIO and newcomers like Brandlight or Quilt. Understanding your brand’s performance inside large language models (LLMs) requires reconciling two different kinds of data: synthetic “lab” data and observational “field” data.

Synthetic “lab” data

This is data you generate by running a curated set of prompts through LLMs, either by hand or via platforms like Semrush’s Enterprise AIO. It lets you benchmark performance, spot errors and see how different models respond to specific queries. It reveals the theoretical limits of your brand’s presence in AI-generated answers: what is possible under ideal test conditions.

However, this lab-grown data doesn’t reflect the messy, contextual, and memory-driven nature of real-world user interactions.

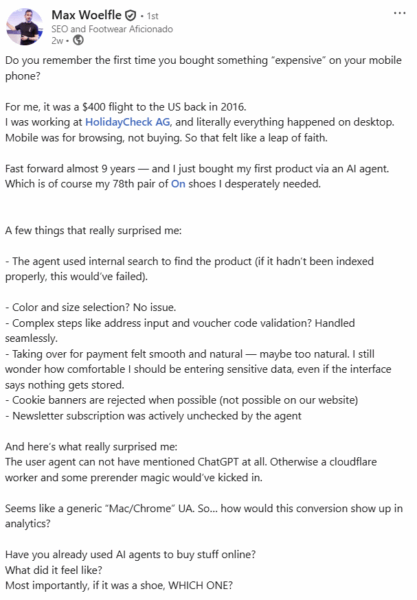

Purely prompt-based tools test isolated prompts such as “What is the best HR software for SMBs in Canada?” and log the results, offering a snapshot of brand presence. This approach is incomplete because its results have little connection to real-world usage. Case in point: people are starting to rely on agentic AI to make online purchases, a multi-step process shaped by context and conversation history that a single isolated prompt cannot reproduce.
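At its core, a prompt-based snapshot reduces to a simple ratio: of the responses logged for a prompt set, what share mention the brand? The minimal Python sketch below computes that ratio per model. It assumes you have already collected response text by hand or from a tool's export; the brand names, model labels and sample answers are hypothetical, and the whole-word regex match is a simplification (commercial platforms likely use more robust entity matching).

```python
import re
from collections import defaultdict

# Hypothetical log format: (model_label, prompt, response_text).
ResponseLog = list[tuple[str, str, str]]


def mention_rate(log: ResponseLog, brand: str) -> dict[str, float]:
    """Per model, the share of logged responses that mention `brand`.

    Matching is case-insensitive and whole-word, a simplifying assumption;
    real tools may also resolve aliases, misspellings and product names.
    """
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for model, _prompt, text in log:
        totals[model] += 1
        if pattern.search(text):
            hits[model] += 1
    return {model: hits[model] / totals[model] for model in totals}


# Hypothetical sample: brands, models and answers are invented for illustration.
SAMPLE_LOG: ResponseLog = [
    ("model-a", "What is the best HR software for SMBs in Canada?",
     "Popular options include AcmeHR and PeopleCo."),
    ("model-a", "Which HR tools suit small Canadian firms?",
     "PeopleCo is often recommended."),
    ("model-b", "What is the best HR software for SMBs in Canada?",
     "Consider acmehr for payroll."),
]

if __name__ == "__main__":
    print(mention_rate(SAMPLE_LOG, "AcmeHR"))  # {'model-a': 0.5, 'model-b': 1.0}
```

The number is only as meaningful as the prompt set behind it: each isolated prompt contributes one data point with no memory or conversational context, which is exactly the limitation described above.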

To fill the visibility gap, some vendors offer advanced simulations. One method is system saturation, a kind of brute-force audit of the AI that analyzes millions of responses to map your brand’s entire potential footprint. The other is user simulation, which invents thousands of fake customer “personas” to stress-test how the AI handles different types of queries.

The takeaway is this: these are lab experiments. They are valuable for your product and technical teams to find and fix flaws. Industry authorities like Jamie Indigo acknowledge the value of this approach because it helps expose clarity gaps and reveal edge-case behaviors. Others, like Chris Green, a veteran Fortune 500 SEO strategist, underscore its arbitrary nature, pointing out that simulations do not reflect actual customer behavior and cannot be used to predict business outcomes like sales or campaign ROI. Relying on simulated data alone for strategic decisions can be a critical mistake; you need to combine it with input from real customers and users.
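To make user simulation concrete, here is a minimal Python sketch of one way a persona grid could be expanded into test prompts: cross a few persona attributes, then fill query templates from each persona. The attributes, values and templates are hypothetical, and this is not a description of how any particular vendor builds its personas.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Persona:
    # Hypothetical attributes; real simulators may model many more.
    role: str
    company_size: str
    region: str


# Hypothetical query templates; {placeholders} are filled from each persona.
TEMPLATES = [
    "As a {role} at a {company_size} company in {region}, what is the best HR software?",
    "I'm a {role} in {region}. Which HR tools suit a {company_size} team on a tight budget?",
]


def build_personas(roles: list[str], sizes: list[str], regions: list[str]) -> list[Persona]:
    """Cross every attribute value to get one persona per combination."""
    return [Persona(r, s, g) for r, s, g in product(roles, sizes, regions)]


def simulate_prompts(personas: list[Persona], templates: list[str]) -> list[str]:
    """Expand every persona x template pair into a concrete test prompt."""
    return [
        t.format(role=p.role, company_size=p.company_size, region=p.region)
        for p, t in product(personas, templates)
    ]


if __name__ == "__main__":
    personas = build_personas(
        ["payroll manager", "startup founder"],
        ["10-person", "200-person"],
        ["Toronto", "Vancouver"],
    )
    prompts = simulate_prompts(personas, TEMPLATES)
    print(len(prompts))  # 16 (8 personas x 2 templates)
    print(prompts[0])
```

The grid grows multiplicatively: ten values for each of three attributes already yields 1,000 personas, which is how simulations reach thousands of synthetic customers. However large the grid, every prompt is still invented, so the output remains lab data.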

Observational “field” data, a.k.a. clickstream

This is clickstream data from real, anonymous users. It records genuine user actions, showing which pages are seen, clicked on or ignored. Most AI visibility tools combine synthetic and clickstream data because together they pair the ideal scenario with what is actually happening. The integrity of any AI analytics tool is only as strong as its underlying clickstream data panel, so favor tools and platforms that are transparent about theirs. Datos and Similarweb frequently appear as clickstream data sources. Datos, a Semrush company that helps power AIO, offers tens of millions of anonymized user records across 185 countries and every relevant device class. Data at that scale lets you anchor market decisions in real behavior in a way that synthetic personas or millions of curated brand prompts cannot.

You should ask vendors about the scale, validation methods and bot-exclusion practices of their clickstream data source. Any hesitation or opacity should prompt a closer look at what data is being used. Your goal is to find a platform that anchors your strategic decisions in what is real, not just what is possible in a simulation. Modern digital marketing requires mapping possibilities against profitability.

Calibrating the map of what’s possible vs. what’s profitable

Lab data alone is an idealized map of possibilities. Field data by itself is a rearview mirror, showing what happened without explaining why. Actionable strategy is forged in the gap between them, so the core task for modern marketers is to compare the two data streams continuously. Use lab data to map what is possible in a controlled environment. Use field data, the clickstream data your tool provides, to validate what is real and profitable. The “messy middle” has not disappeared; it has become a dynamic feedback loop. When evaluating any LLM visibility tool, the central question is how it integrates these two data streams. Its quality depends on the scale and integrity of its underlying clickstream data panel and on your ability to calibrate the prompts you want to track.
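One way to picture the continuous comparison is a per-topic gap between lab visibility and field share, sketched minimally in Python below. Everything here is hypothetical: the topic names, the two input metrics (share of synthetic answers that mention the brand vs. the brand's share of observed AI-referred clickstream), the tolerance and the labels. Because the two shares measure different things, treating their difference as a gap is a simplifying heuristic, not a standard metric.

```python
from dataclasses import dataclass


@dataclass
class TopicSignal:
    topic: str
    lab_visibility: float  # hypothetical: share of synthetic test answers mentioning the brand (0-1)
    field_share: float     # hypothetical: brand's share of observed AI-referred clickstream (0-1)


def calibrate(signals: list[TopicSignal], tolerance: float = 0.1) -> dict[str, tuple[float, str]]:
    """Label each topic by how far observed (field) reality diverges from lab potential."""
    report: dict[str, tuple[float, str]] = {}
    for s in signals:
        gap = s.lab_visibility - s.field_share
        if gap > tolerance:
            # Visible in tests but weak in real traffic.
            label = "possible, not yet profitable"
        elif gap < -tolerance:
            # Real users find the brand more than tests predict,
            # so the tracked prompts may not match real queries.
            label = "review prompt set"
        else:
            label = "calibrated"
        report[s.topic] = (round(gap, 2), label)
    return report


# Hypothetical sample values for illustration only.
SAMPLE_SIGNALS = [
    TopicSignal("payroll", lab_visibility=0.70, field_share=0.30),
    TopicSignal("onboarding", lab_visibility=0.40, field_share=0.35),
    TopicSignal("compliance", lab_visibility=0.10, field_share=0.40),
]

if __name__ == "__main__":
    for topic, (gap, label) in calibrate(SAMPLE_SIGNALS).items():
        print(f"{topic}: gap={gap:+.2f} -> {label}")
```

Run on a schedule, the labels form the feedback loop: a large positive gap points to potential that isn't converting, while a large negative gap suggests the prompts you track need recalibrating.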

Opinions expressed in this article are those of the sponsor. MarTech neither confirms nor disputes any of the conclusions presented above.
