Facebook’s humanless sifting of trending news suggests some AI needs supervision

As AI suits up for self-driving marketing platforms, the Facebook experience may outline some boundaries.

Artificial intelligence (AI) is powering marketing tools at various levels, with some products already emerging and many predictions forecasting self-managed platforms.

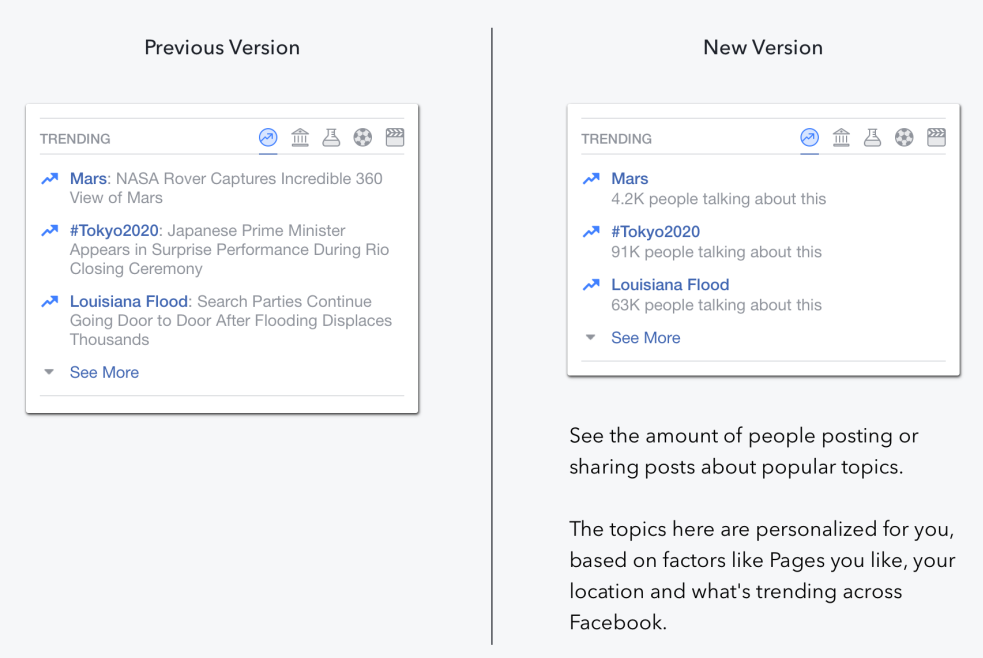

But Facebook’s removal last month of human editors from its Trending Topics team, and the resulting errors in judgment by its AI-powered algorithm, raise the question of whether AI can be left alone without human supervision, at least for the foreseeable future. Among other tasks, the editors had written the short descriptions that accompanied the topics.

In particular, there may be some kinds of content and contexts — such as the combo of news and Facebook — where human sensibility is still required.

The human editing process had come under fire from conservatives following a story in Gizmodo about whether conservative-oriented viewpoints were neglected. Facebook conducted an internal review that found no evidence of bias, but decided anyway to remove the humans from the process. Human engineers still remain in the unit, but to tweak the algorithm, not to oversee the choices.

Although Facebook contended that Trending Topics were always surfaced by an algorithm, the team of editors had been charged with making sure the results were what the company called “high quality and useful,” as well as linking the topics to relevant news stories. The algorithm is optimized for stories that demonstrate a large volume of mentions on Facebook, as well as for peaks of mentions in a short period of time.
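To make those two signals concrete, here is a minimal sketch of how a score might combine overall mention volume with a short-term spike. Facebook has not published its formula, so everything here is an assumption for illustration: the function names `trending_score` and `rank_topics`, the one-hour window, and the weights are all hypothetical.

```python
from collections import Counter


def trending_score(mention_minutes, window=60, volume_weight=1.0, spike_weight=5.0):
    """Score one topic from the minutes (as integers) at which it was mentioned.

    Hypothetical scoring rule: total volume plus a bonus for the busiest window.
    """
    if not mention_minutes:
        return 0.0
    total = len(mention_minutes)
    # Bucket mentions into fixed-size windows; the busiest bucket is the "peak".
    buckets = Counter(minute // window for minute in mention_minutes)
    peak = max(buckets.values())
    return volume_weight * total + spike_weight * peak


def rank_topics(mentions_by_topic, **kwargs):
    """Return topic names ordered from highest to lowest score."""
    return sorted(
        mentions_by_topic,
        key=lambda topic: trending_score(mentions_by_topic[topic], **kwargs),
        reverse=True,
    )


# Made-up data: one mention per hour for a day vs. twelve mentions in twelve minutes.
steady = [hour * 60 for hour in range(24)]
spiky = [600 + i for i in range(12)]

ranking = rank_topics({"steady": steady, "spiky": spiky})
print(ranking)
```

In this toy data the spiky topic outranks the steady one despite having half the mentions. Nothing in a score like this asks whether a story is true or appropriate, which is the gap the editors had been filling.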

The human editors were removed on Friday, August 26, and issues with story choices emerged soon after. Over the weekend, two inappropriate stories surfaced in Trending Topics. One was about a Saturday Night Live star’s obscenity-enhanced roast of right-wing pundit Ann Coulter, and the other was about a video of a man getting intimate with a McDonald’s sandwich.

And, on that Monday, there was a fake story: “Fox News Exposes Traitor Megyn Kelly, Kicks Her Out for Backing Hillary.” According to the Washington Post, the fake article remained for several hours as Facebook’s top Megyn Kelly story.

‘Not surrender completely’

The intention of Trending Topics, Facebook says, is to “surface the major conversations happening on Facebook,” including news events, but without the “suppression of political perspectives.”

In fact, Titus Capilnean, Growth Manager at AI-powered customer service provider DigitalGenius, told me, intention is one of at least two key human traits that AI software still needs from us carbon-based mortal units. Those two traits, he said, show why Facebook “should not surrender completely to an algorithm.”

Until we reach the point when computing systems become conscious, an intelligent system’s intention needs to be defined by humans. We still determine if a system is intended to increase sales, suggest a movie like the one we’ve just rented, or find news stories that are generating conversations.

The other human-specific trait, Capilnean said, is empathy. Algorithms have begun to detect emotions, but empathy involves one human being’s innate sense of another’s feelings.

Perhaps more comprehensive data and better training could have helped the Facebook algorithm recognize that the Megyn Kelly story was bogus. But empathy might be the determining factor in deciding if, say, a story about a hundred people dying in a hurricane in the Philippines deserves a higher ranking in the news than Justin Bieber’s latest breakup with his girlfriend. Otherwise, Facebook is content to let click popularity do that.

Although Facebook co-founder and CEO Mark Zuckerberg has said his company is a technology platform and not a publisher, a study this year by the Pew Research Center found that an astounding 44 percent of U.S. adults get their news at least in part on Facebook. Whether the company wants to or not, it is a publisher.

So, assessing story importance and credibility is essential to any organization providing a filter for news. The question is whether the algorithm can develop that kind of sophisticated judgment.

Point of view

This kind of understanding, Capilnean said, is not going to show up in AI “anytime soon.”

“It needs guidance from a human editor,” he said. “AI shouldn’t be left alone.”

Although the removal of human editors was intended to remove any semblance of bias, the algorithm’s problem thus far with making news assessments is not a lack of objectivity. The problem, in part, is that it doesn’t seem to have a sufficiently realized point of view.

If you read the New York Times, you implicitly subscribe to the Times’ view of the world. You trust its perspective for making value judgments, not its neutrality.

That’s especially true for news, and, according to Chris Monberg, CTO of AI/marketing firm Boomtrain, it pertains as well to other kinds of business.

“All AI needs to be opinionated to meet business obligations,” he said. For example, the software might need to offer its “opinions” about who might make a good future customer and which marketing efforts would best reach them.

Facebook’s algorithm for news, like Boomtrain’s for marketing tech, needs an opinionated view of its task. It needs a way of determining validity and value, according to some standard.

Monberg told me you could create “an approximate taxonomy” of values that is comprehensive enough to know whether a story is appropriate or not.

Under construction

As an example, he said, you could introduce things like “shaming,” similar to a child’s sense of shame after being scolded for using a bad word. It’s the same kind of training that Microsoft could have used, he pointed out, to prevent its chatbot Tay from being goaded by users into becoming a racist.

Tay would have had “opinions” that place values on permissible conversations, just as a news algorithm might be able to determine when a story about a sensitive subject has value, or if it seemed credible.

But those are tricky choices, Monberg said, and AI is not yet able to match the integrity and judgment of a human news editor.

Although Facebook could introduce “more sophisticated framing models” than what it’s now using, he said, we’re still a long way from emulating a human editor.

AI may be ready for prime time to make its own decisions about how to best sell socks to the most receptive online customers, but its news judgment appears to be still under construction.

Opinions expressed in this article are those of the guest author and not necessarily MarTech.
