The future of paid search buying

Columnist Andrew Ruegger believes that programmatic could be key to the future of paid search.

Technically savvy search marketers can be innovative with campaigns above and beyond what bid management platforms (BMPs) provide. They can expertly work in between the BMPs and UIs, using advanced practices such as AdWords scripting. This is great, and we may see more of this in the future.

However, the most sophisticated buying is done in a completely algorithmic fashion using in-memory computing systems with active API data transfer. The future of paid search marketing superiority will be determined by the amount of information used to model decision-making scenarios and the ability to purchase and adjust bids in an entirely programmatic way.

Yes, you read that correctly: in an entirely programmatic way. You’re probably thinking “no way,” and you’re not alone. The overwhelming feedback from search marketers I speak with is, “No, that will never happen. Unlike programmatic display, search has a way more complicated auction with too many factors that computer programs can’t properly deal with.”

Although search is more complex than programmatic display in some ways, the search buying process follows rules — everything from selecting keywords to formatting accounts to the ad copy you write. The remainder of this article will explain what it takes to execute search in an entirely algorithmic fashion, what the major barriers are, and what that world will look like for a search marketer.

The future of SEM

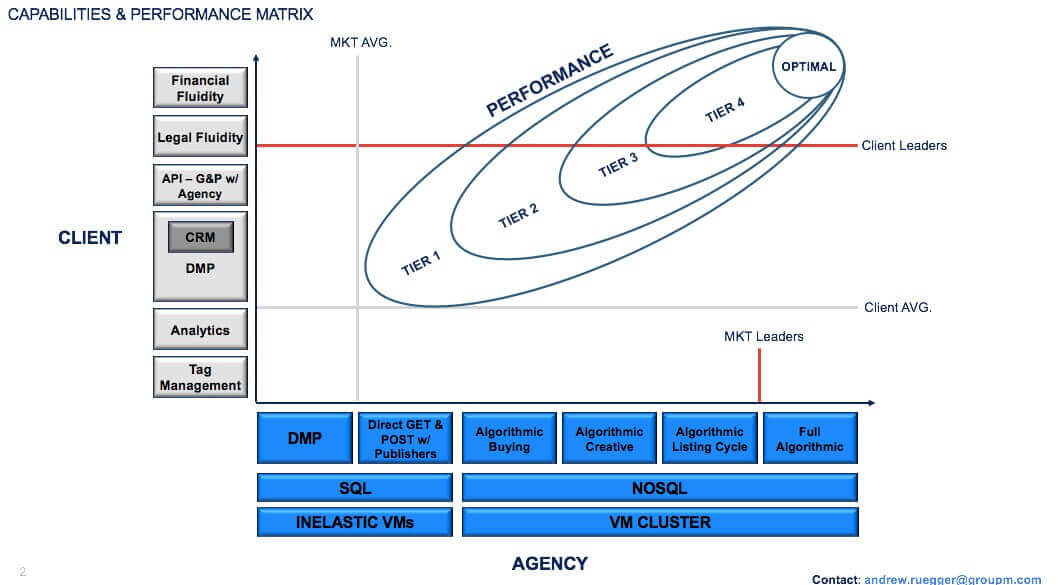

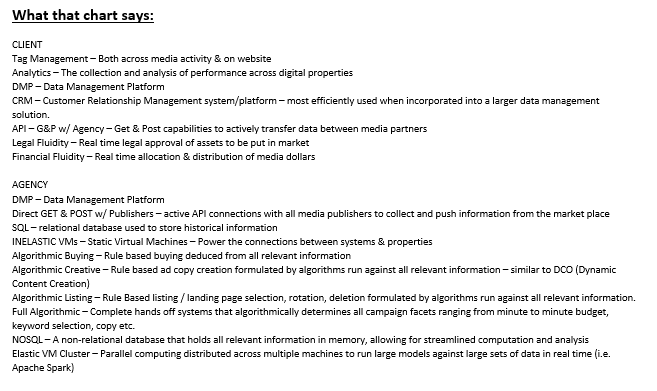

I have been conceptually explaining the future of SEM in the following way:

The future of search performance is a function of an agency’s technological modeling capabilities, which ultimately becomes a function of client capabilities:

P(Ac(Cc))

Performance (Agency Capabilities (Client Capabilities))

Hypothetically, if a client has the ability to move money around from channel to channel, and the ability to approve copy in real time, there is no real advantage in performance until the agency can algorithmically create content, rotate listings (landing pages) or have full algorithmic buying capabilities across channels.

Conversely, if an agency can run campaigns in an entirely algorithmic way, but the clients do not manage their data correctly, properly segment audiences, have active data transfer or have the ability to approve creative changes on the fly, there is no performance gain for those clients. The agency still maintains a cost of business advantage but cannot exceed a performance threshold.

This type of setup really applies to managing all digital channels algorithmically, and without this type of system relationship, real-time buying and attribution are not possible. However, the more complicated the industry, the more the complexity is increased by the media mix and the amount of information or number of systems that need to communicate.

Barriers in the search industry: bid management platforms and AdWords scripting

AdWords scripting, although powerful, has significant limitations. For example, each account is subject to a reference refresh rate, a character limit, data absorption criteria and an overall script limit. In short, Google is not currently set up for processing unlimited scripting, nor should it be, because the industry demand simply is not there yet. Even if there were no limits, a JavaScript-based environment may make it very difficult to perform every operation that fully algorithmic campaign execution would require.

Search BMPs, which are great for some objectives, are also not yet set up for fully algorithmic buying. Industry leaders such as Kenshoo and Adobe have started to add impressive modeling capabilities and great functionality; however, there are limitations to what you can achieve. Currently, the BMPs have a one-day delay in posts to publishers, a lag in supporting new publisher features (new buy formats/capabilities) and limited active APIs that allow open real-time communication. Although they all appear to be moving that way, it’s a tall order to fill given the diversity of media mixes across their client base, new and emerging channels and an ever-growing ad tech industry.

Algorithmic/programmatic buying

I think it’s first important to note that there are already many algorithmic processes in the publisher UI, BMPs and AdWords scripting. One example is the binary logic of “off or on,” used for basic tasks such as keyword bidding for CPC management:

If cost[keyword1] > x, then status[keyword1] = disable else active
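As a rough illustration, the same on/off rule could be written in Python as follows. The keyword names, spend figures and the `cost_ceiling` threshold are all hypothetical; this is a sketch of the logic, not of any publisher's or BMP's actual rule engine.

```python
def keyword_status(cost: float, cost_ceiling: float) -> str:
    """Binary rule: disable a keyword once its cost exceeds the ceiling."""
    return "disabled" if cost > cost_ceiling else "active"


# Hypothetical spend per keyword for the current period.
costs = {"running shoes": 182.40, "trail shoes": 61.15}

# Apply the same yes-or-no rule to every keyword.
statuses = {kw: keyword_status(cost, cost_ceiling=100.0) for kw, cost in costs.items()}
print(statuses)
```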

The more advanced rules, like Optimize to Conversion, are using similar yes-or-no logic, but with an additional series of rules linked together across multiple metrics, keywords, ad groups and so on. In this case, using AdWords metrics Value/Conversion and Cost/Conversion, it would look something like this:

If [[(value/conversion)[keyword1]] / [(cost/conversion)[keyword1]]] > [[(value/conversion)[keyword2]] / [(cost/conversion)[keyword2]]] then maxBid[keyword1] = maxBid[keyword2] + %valuedifference else maxBid[keyword2] = maxBid[keyword1] + %valuedifference
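One possible reading of that rule, sketched in Python. The rule above does not define `%valuedifference` precisely, so this sketch assumes it means the relative gap between the two keywords' value-to-cost ratios; the `KeywordStats` class and the sample numbers are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    value_per_conversion: float
    cost_per_conversion: float  # assumed to be greater than zero
    max_bid: float

    @property
    def efficiency(self) -> float:
        """(value/conversion) divided by (cost/conversion)."""
        return self.value_per_conversion / self.cost_per_conversion


def rebalance_bids(kw1: KeywordStats, kw2: KeywordStats) -> None:
    """Set the more efficient keyword's bid above the other keyword's bid.

    Assumption: the uplift is the relative difference in efficiency.
    """
    better, worse = (kw1, kw2) if kw1.efficiency > kw2.efficiency else (kw2, kw1)
    uplift = (better.efficiency - worse.efficiency) / worse.efficiency
    better.max_bid = round(worse.max_bid * (1 + uplift), 2)


kw1 = KeywordStats(value_per_conversion=50.0, cost_per_conversion=10.0, max_bid=1.00)
kw2 = KeywordStats(value_per_conversion=40.0, cost_per_conversion=10.0, max_bid=2.00)
rebalance_bids(kw1, kw2)
print(kw1.max_bid, kw2.max_bid)
```

Here keyword1 returns 25 percent more value per unit of cost than keyword2, so its max bid is set 25 percent above keyword2's bid, while keyword2 is left unchanged.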

AdWords scripting allows you to create programmatic rules that either do not deal with in-campaign data or are too complex for binary/nested binary logic, e.g., referencing a third-party JSON feed based on sports scores that you want to incorporate into your ad copy.
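To make the sports-score idea concrete, here is a minimal Python sketch of the logic. AdWords scripts themselves are written in JavaScript, so this is only an illustration. The feed structure, the team names and the 30-character headline limit are assumptions; check your publisher's current ad specifications before relying on any limit.

```python
import json

HEADLINE_LIMIT = 30  # assumed character limit, not taken from the article


def headline_from_score(feed_json: str, fallback: str) -> str:
    """Build a headline from a score feed, falling back if it is too long."""
    game = json.loads(feed_json)
    headline = f"{game['home']} {game['home_score']}-{game['away_score']} {game['away']}"
    return headline if len(headline) <= HEADLINE_LIMIT else fallback


# Hypothetical payload; a real script would fetch this from the third-party feed.
sample_feed = '{"home": "Lions", "away": "Bears", "home_score": 24, "away_score": 17}'
headline = headline_from_score(sample_feed, fallback="Shop Official Team Gear")
print(headline)
```

The fallback matters in practice: copy generated from outside data can easily break a publisher's length rules, so the rule needs a safe default.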

No matter how clever you may get, even an expert at AdWords scripts will tell you that you cannot automate the full depth of the buying process in the mentioned environments for a variety of reasons, including several types of rate limits, the lack of proper information to script against, cross-engine implementation and so forth.

Overcoming the obstacles

The first step is to establish a direct connection to the publishers (Google, Bing, Yahoo) via API. You will need a developer’s API key for each, which allows you to access your linked accounts’ data as frequently as you would like and to instruct the publishers to modify any element of your accounts/campaigns that you find in their UIs.

Side note: If you ever wondered how products like Marin, Kenshoo and DS3 work, they all have these connections, link your accounts to their developer API keys and require properly formatted URL parameters so their systems can link publishers and understand what is happening. Then, a search marketer makes choices through their user interface that tell their back-end systems to communicate with the publishers via API connections to adjust your campaigns.

Once you can ask the publishers the current state of a campaign (including all dimensions and metrics you would like to see) and programmatically tell them what to do with your campaigns — in other words, if your systems know all of the right things to say — you could be fully algorithmic. As a search marketer, the hard part is obviously figuring out the right things to do within the market based on a vast number of inputs: research, trends, what your clients want, what the greater media team wants, massive budget changes and so on. It becomes conceptually difficult to imagine how all of those things could be done without an expert search marketer at the helm.
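The ask-then-tell cycle described above might be sketched like this in Python. `PublisherClient`, its two methods and the in-memory stand-in are hypothetical names invented for this example; they do not correspond to any real publisher SDK, and the bid rule (nudge bids by 10 percent around a target cost per acquisition) is deliberately simplistic.

```python
from typing import Protocol


class PublisherClient(Protocol):
    """Hypothetical interface; a real client would wrap a publisher API."""

    def fetch_keyword_stats(self) -> dict[str, dict[str, float]]: ...
    def set_max_bid(self, keyword: str, bid: float) -> None: ...


class InMemoryPublisher:
    """Stand-in client so the sketch runs without network access."""

    def __init__(self, stats: dict[str, dict[str, float]]) -> None:
        self.stats = stats

    def fetch_keyword_stats(self) -> dict[str, dict[str, float]]:
        return self.stats

    def set_max_bid(self, keyword: str, bid: float) -> None:
        self.stats[keyword]["max_bid"] = bid


def run_cycle(client: PublisherClient, target_cpa: float, step: float = 0.10) -> dict[str, float]:
    """Ask for the current state, decide, then tell the publisher what to change."""
    changes = {}
    for keyword, s in client.fetch_keyword_stats().items():
        if s["conversions"] == 0:
            continue  # no conversion data yet, so leave the bid alone
        cpa = s["cost"] / s["conversions"]
        factor = 1 + step if cpa < target_cpa else 1 - step
        new_bid = round(s["max_bid"] * factor, 2)
        client.set_max_bid(keyword, new_bid)
        changes[keyword] = new_bid
    return changes


publisher = InMemoryPublisher({
    "running shoes": {"cost": 200.0, "conversions": 10, "max_bid": 1.00},
    "trail shoes": {"cost": 300.0, "conversions": 5, "max_bid": 2.00},
    "hiking boots": {"cost": 40.0, "conversions": 0, "max_bid": 1.50},
})
changes = run_cycle(publisher, target_cpa=30.0)
print(changes)
```

Keeping the decision logic behind a small interface like this is one way to reuse the same rules across publishers, with one client implementation per engine.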

I would encourage you to make a list of all the things you would need to know, all the things you would need to change and how you would change them. By doing so, you have essentially just engineered the basic code necessary to make your paid search fully algorithmic.

At this point, you may think to yourself, “Doing all of this would take more time and effort than it would to just manage them myself,” and in many cases, you would be right — unless your company has invested in owning technology and developing machine learning capabilities.

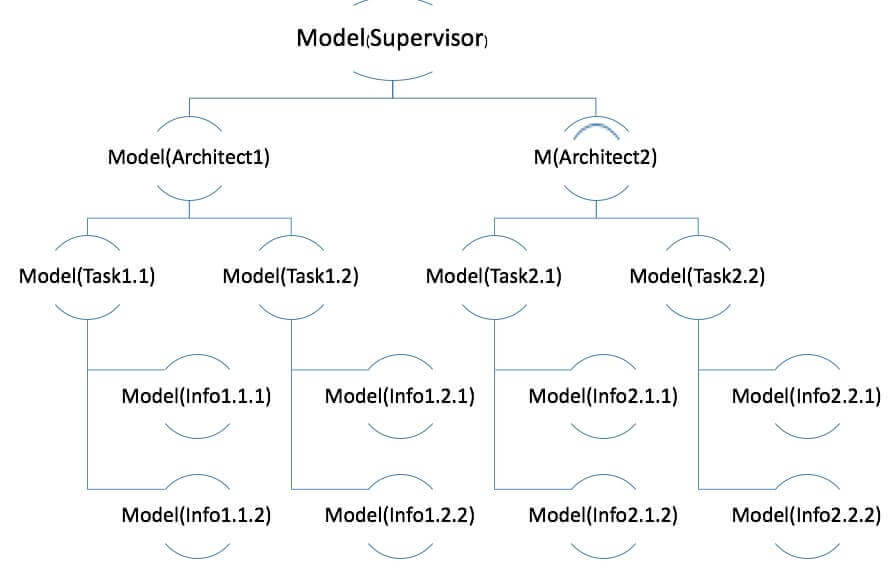

The only way we have seen this work correctly at scale is through machine learning. Typically, an ensemble of decision trees, also known as random forest models, produces the best results for us in search. Random forest is simply a bunch of decision trees working together to make classifications or conclusions. (I suggest you investigate the five major schools of machine learning if this subject interests you, as each model type has its own strengths and weaknesses.)
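To show what "decision trees working together" means, here is a toy Python sketch of the voting step only. The three trees are written by hand and their thresholds are invented; a real random forest learns its trees from training data, typically using bootstrapped samples and random feature subsets, which this example does not attempt.

```python
from collections import Counter


def tree_by_ctr(kw: dict) -> str:
    return "raise" if kw["ctr"] > 0.05 else "lower"


def tree_by_cpa(kw: dict) -> str:
    # A slightly deeper tree: CPA first, then conversion volume.
    if kw["cpa"] < 25.0:
        return "raise"
    return "raise" if kw["conversions"] > 100 else "lower"


def tree_by_position(kw: dict) -> str:
    return "raise" if kw["avg_position"] > 3.0 else "lower"


FOREST = [tree_by_ctr, tree_by_cpa, tree_by_position]


def forest_predict(kw: dict) -> str:
    """Each tree votes on a bid direction; the majority wins."""
    votes = Counter(tree(kw) for tree in FOREST)
    return votes.most_common(1)[0][0]


keyword = {"ctr": 0.08, "cpa": 30.0, "conversions": 40, "avg_position": 4.2}
decision = forest_predict(keyword)
print(decision)
```

With an odd number of trees and two possible labels, the vote can never tie, which keeps this toy version unambiguous.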

While it may be possible to use a single model to manage a complex campaign from construction to execution, it’s highly unlikely, and ultimately, more of a semantic debate. Instead, it’s best to chunk out tasks for different models, including bid optimization, creative selection/rotation, account structure, negative keywords and so on.

I also recommend that you have some operator structures connecting them, where a supervisor algorithm merges the processes of each chunk to implement them in a coherent way. In theory, you could expose the model information from each chunk and have AdWords scripts manage the models, but that would obviously be specific to Google.
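A supervisor of this kind could take many forms. The Python sketch below is one simple possibility, under the assumption that each chunk's model has already produced its recommendations; the function name, the action labels and the conflict rule (a keyword flagged as a negative never receives a bid change) are all illustrative choices rather than a description of any production system.

```python
def supervise(
    bid_changes: dict[str, float],
    negatives: set[str],
    creative_choices: dict[str, str],
) -> list[tuple[str, str, object]]:
    """Merge the outputs of separate models into one ordered action plan."""
    plan: list[tuple[str, str, object]] = []

    # Negative keywords go first so spend on them stops as early as possible.
    for keyword in sorted(negatives):
        plan.append(("add_negative", keyword, None))

    # Resolve conflicts: skip bid changes for keywords that are being excluded.
    for keyword, bid in sorted(bid_changes.items()):
        if keyword not in negatives:
            plan.append(("set_max_bid", keyword, bid))

    # Creative selection is independent of the two steps above.
    for ad_group, ad_id in sorted(creative_choices.items()):
        plan.append(("serve_ad", ad_group, ad_id))

    return plan


plan = supervise(
    bid_changes={"running shoes": 1.10, "free shoes": 0.40},
    negatives={"free shoes"},
    creative_choices={"Shoes - Running": "ad_002"},
)
for action in plan:
    print(action)
```

The point of the design is that no individual model talks to the publisher directly; only the merged plan is executed, so contradictory instructions are resolved in one place.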

A small-sized account with a handful of campaigns requires about a dozen models, while a large account can take up to several thousand.

For example, for one client’s account, we see over 10 million unique keywords through broad match each year, and flagging all negatives was time-consuming. So we trained a random forest model to do it for us, using the client’s legal “do not use” book and eight million flagged keywords from past years as input. The model was able to correctly classify 99.9 percent of all incoming keywords, and it has over 45,000 decision points, or branches.

Ideally, the only input in any marketing campaign is spend, with the solitary goal or objective being return on spend. Branding, email signups, downloads and so on are all subsets of return — hence why a business is called a business and not a non-profit. After you start stitching modeled systems from every channel together, it becomes possible to do accurate, real-time attribution and build full media optimization machines (including search).

Realistically, the data just does not exist in a structured or connected way for the majority of companies, which makes real-time or time-delayed attribution difficult. Given that the system is immensely complex, it should not be a surprise that attribution continues to be a highly touted and debated subject in the marketing industry.

Machine learning works by analyzing past variables and outcomes to predict new ones. As of now, anything that is new and relevant needs to be added to the setup of variables a model is trained on. Determining those variables and properly interpreting coefficients will always require a subject matter expert.

Opinions expressed in this article are those of the guest author and not necessarily MarTech.
